Chapter 22

Advanced Prompt Engineering Techniques

"Advanced prompting techniques are essential for unlocking the full potential of language models, allowing us to guide their behavior and enhance their performance in increasingly sophisticated ways." — Yann LeCun

Chapter 22 delves into advanced prompt engineering techniques for large language models, focusing on innovative methods that extend beyond traditional few-shot and zero-shot prompting. It covers Chain of Thought Prompting, which enhances model interpretability by generating intermediate reasoning steps; Meta Prompting, which influences model behavior through strategically crafted prompts; Self-Consistency Prompting, which ensures reliability by generating multiple outputs for consistency; and Generate Knowledge Prompting, which leverages prompts to elicit specific knowledge. Additionally, it explores advanced techniques like Prompt Chaining, Tree of Thoughts, Automatic Prompt Engineering, Active-Prompt, ReAct Prompting, Reflexion Prompting, Multi-Modal Chain of Thought, and Graph Prompting, each offering unique ways to improve model performance and adaptability using the llm-chain Rust crate.

22.1. Chain of Thought Prompting

Chain of Thought (CoT) prompting is an advanced technique in natural language processing designed to encourage large language models (LLMs) to generate intermediate reasoning steps before arriving at a final answer. This approach is invaluable in scenarios demanding multi-step reasoning, logical deduction, or the breakdown of complex information, as it leads the model to "think aloud." By emulating human problem-solving methods, CoT prompting enhances both the accuracy and interpretability of model outputs, enabling the model to achieve clearer and more reliable conclusions. Structuring prompts to request these intermediate steps enables models to simulate a coherent thought process, improving transparency and enabling users to follow the model's logic more closely, which is particularly beneficial for applications where decision paths must be clear and justified.

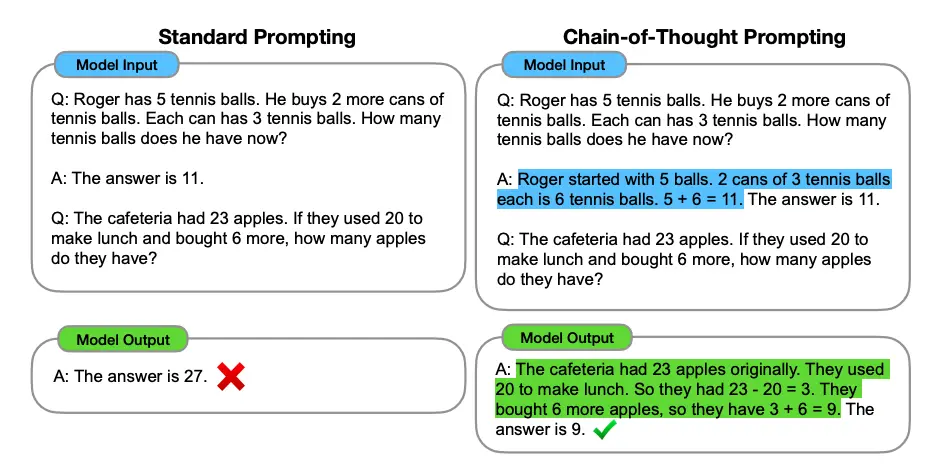

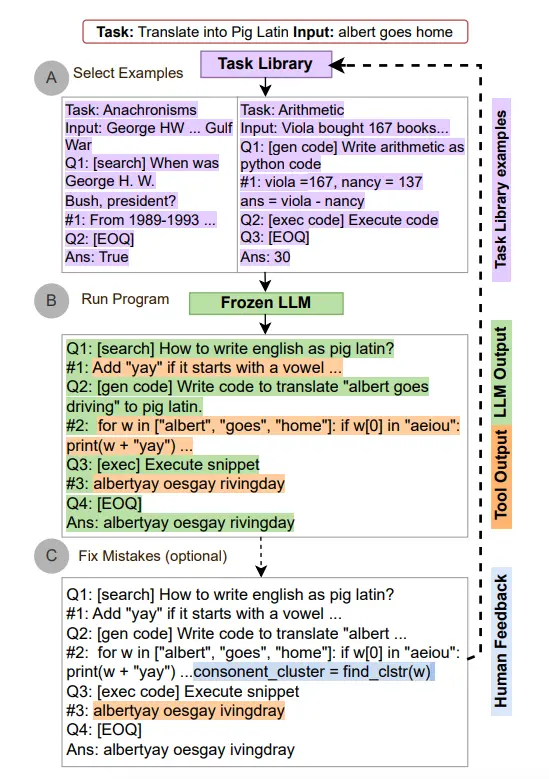

Figure 1: Illustration of CoT from https://www.promptingguide.ai.

In CoT prompting, a model’s output is structured to follow a sequence of reasoning steps, represented mathematically as $R = (r_1, r_2, \ldots, r_n)$, where each $r_i$ is an intermediate step leading to the final answer $A$. This structure transforms the output into a conditional sequence, defined as $P(A | X) = \prod_{i=1}^n P(r_i | r_{1:i-1}, X)$, where $X$ is the initial input. Each step $r_i$ becomes a layer in the decision-making path, allowing users to observe and verify each component of the reasoning chain. This structure not only enhances transparency but also allows for targeted corrections if errors are found in any step, increasing robustness and reliability in high-stakes applications.

Beyond improved performance, CoT prompting is transformative for interpretability, especially in fields like law and healthcare where transparent decision paths are essential. In legal contexts, for instance, CoT prompts can guide a model to elaborate on each principle or case precedent it considers, offering a transparent view of the rationale behind a judgment. Similarly, in healthcare, CoT prompts can help the model explain each inference based on patient history and test results, giving clinicians insight into each step that contributed to the final diagnosis. This interpretability fosters trust in the model’s outputs and allows professionals to make more informed decisions.

Implementing CoT prompting in Rust is facilitated by the llm-chain crate, which supports prompt chaining to create intermediate reasoning steps within model responses. The example code below shows a CoT setup for a math problem, where each prompt explicitly directs the model to handle individual calculations step-by-step, ensuring clarity and reducing the risk of cumulative errors.

[dependencies]

candle-core = "0.7.2"

candle-nn = "0.7.2"

ndarray = "0.16.1"

petgraph = "0.6.5"

tokenizers = "0.20.3"

reqwest = { version = "0.12", features = ["blocking"] }

rayon = "1.10.0"

regex = "1.11.1"

llm-chain = "0.13.0"

llm-chain-openai = "0.13.0"

tokio = "1.41.1"

use llm_chain::{chains::sequential::Chain, executor, options, parameters, step::Step};

use llm_chain::prompt::{Data, StringTemplate};

use tokio;

// CoT Prompt Setup: Prompt for multi-step reasoning

fn chain_of_thought_prompt() -> Step {

let prompt_content = "Solve the problem step-by-step:\n\n\

Problem: A train travels 60 miles in 1 hour, then 80 miles in 2 hours. What is the total distance covered?\n\

Step 1: Determine the distance covered in the first hour.\n\

Step 2: Determine the distance covered in the next two hours.\n\

Step 3: Sum the distances from each step to find the total distance.\n\

Solution:";

let template = StringTemplate::from(prompt_content);

let prompt = Data::text(template);

Step::for_prompt_template(prompt)

}

#[tokio::main(flavor = "current_thread")]

async fn main() -> Result<(), Box<dyn std::error::Error>> {

// Initialize options with the API key

let options = options! {

ApiKey: "sk-proj-..." // Replace with your actual API key

};

// Create a ChatGPT executor

let exec = executor!(chatgpt, options)?;

// Execute CoT Prompting

let cot_chain = Chain::new(vec![chain_of_thought_prompt()]);

let cot_result = cot_chain.run(parameters!(), &exec).await?;

println!("Chain of Thought Result: {}", cot_result.to_string());

Ok(())

}

This Rust code demonstrates a structured CoT prompt guiding the model through each logical step for solving a math problem. Each stage of reasoning is laid out in the prompt, leading the model to handle individual calculations before reaching a conclusion. By leveraging the llm-chain crate, developers can implement such multi-step logic efficiently, transforming complex problems into smaller, manageable components. This setup not only enhances transparency but also ensures that each reasoning step is clear and reduces potential errors by isolating logical steps, making it suitable for applications where precision is crucial.

Evaluating CoT prompting effectiveness requires a mix of quantitative metrics like accuracy scores and qualitative assessments of reasoning clarity, coherence, and logical flow. Rust's performance and concurrency support make it ideal for automating evaluations across extensive datasets, enabling systematic testing of different CoT strategies. Advanced real-world applications include financial analysis, educational tutoring, and legal decision-making, all of which benefit from CoT's ability to provide a structured approach to complex problems. Emerging trends in CoT, such as dynamic CoT and reinforcement learning integration, enable adaptive and optimized reasoning, offering exciting potential for responsive, transparent, and high-stakes AI applications.

The real-world applications of CoT prompting span a wide range of fields. In financial analysis, for example, CoT prompting can break down complex calculations and comparisons needed for risk assessment, enabling auditors or analysts to trace each financial inference made by the model. In educational settings, CoT prompting can assist students in learning by generating step-by-step solutions for math problems, programming challenges, or scientific explanations. By explaining each step, the model serves not only as an answer generator but also as a tutor, guiding learners through the logic behind each answer. These applications highlight the versatility of CoT prompting in situations that require a structured, transparent approach to complex problems.

One of the emerging trends in CoT prompting is dynamic CoT, where intermediate steps are not pre-defined but generated adaptively based on user queries or model feedback. For instance, in a dynamic CoT setup, a user query might initiate a chain of reasoning, with the model generating each subsequent step based on the results of previous steps. This approach leverages feedback loops to ensure that the CoT remains relevant and coherent, particularly in situations where initial assumptions or partial conclusions may need adjustment. Implementing dynamic CoT systems in Rust benefits from Rust’s concurrency support, allowing for real-time feedback and adaptive adjustment in interactive applications.

Another trend involves the integration of reinforcement learning into CoT prompting to optimize intermediate reasoning paths. Here, CoT prompts are fine-tuned through feedback loops, where desirable reasoning sequences are rewarded, refining the model’s logical structure over time. Reinforcement learning further strengthens CoT’s potential for high-stakes applications, as models learn to prioritize logical clarity and coherence based on evaluated outcomes. Rust’s performance characteristics make it an ideal choice for such adaptive systems, as it allows developers to handle continuous feedback efficiently, refining CoT structures dynamically in response to live user interactions.

Ethical considerations are essential in CoT prompting, especially when the generated reasoning affects decision-making in areas like healthcare, finance, or criminal justice. For example, bias in intermediate reasoning steps could skew the model’s conclusions, potentially leading to incorrect or unfair outcomes. To mitigate such risks, CoT prompts can be designed with fairness and transparency as key principles, encouraging balanced reasoning steps and explicitly addressing potential biases within the chain of thought. By carefully monitoring and refining CoT prompts, developers can ensure that outputs are fair, balanced, and reflective of diverse perspectives.

In conclusion, Chain of Thought prompting represents a significant advancement in improving the transparency, accuracy, and interpretability of LLMs. By guiding models through intermediate reasoning steps, CoT prompts align AI-driven problem-solving with human logic, making outputs more reliable and understandable. Rust’s llm-chain crate offers a powerful toolkit for implementing and customizing CoT prompts, enabling developers to structure prompts for even the most complex reasoning tasks. As research in CoT prompting continues to progress, dynamic CoT and reinforcement learning integration are emerging as promising avenues, paving the way for increasingly adaptive and responsive CoT systems. By incorporating best practices and ethical considerations, developers can leverage CoT prompting to deploy robust, transparent AI solutions across diverse industries, setting a new standard for responsible AI.

22.2. Meta Prompting

Meta prompting is a sophisticated technique in prompt engineering that allows developers to influence not just the content but also the tone, style, adaptability, and overall behavior of language model responses. By moving beyond simple direct prompts, meta prompting creates a layer of abstraction where initial prompts define specific guidelines, instructions, or criteria that the model will follow when generating subsequent responses. This approach enables the model to dynamically adjust its output based on context, user intent, or specific requirements, fostering more nuanced, adaptable interactions. Through meta prompting, developers can effectively guide the model to meet complex objectives, such as adopting a particular tone, varying response complexity, or prioritizing creativity, which enhances the model's ability to handle intricate or context-sensitive tasks.

Meta prompting, as outlined by Zhang et al. (2024), is characterized by a structure-oriented approach that emphasizes the format and pattern of prompts, focusing more on the organization of problems and solutions than on specific content. It leverages syntax as a guiding framework, allowing the prompt's structure to inform the expected response. This technique often incorporates abstract examples that illustrate problem-solving frameworks without delving into details, providing a versatile and adaptable foundation applicable across diverse domains. By emphasizing categorization and logical arrangement, meta prompting applies a categorical approach rooted in type theory, which aids in structuring components logically within a prompt. These characteristics make meta prompting particularly effective in producing flexible, context-aware responses that align with a wide variety of complex tasks.

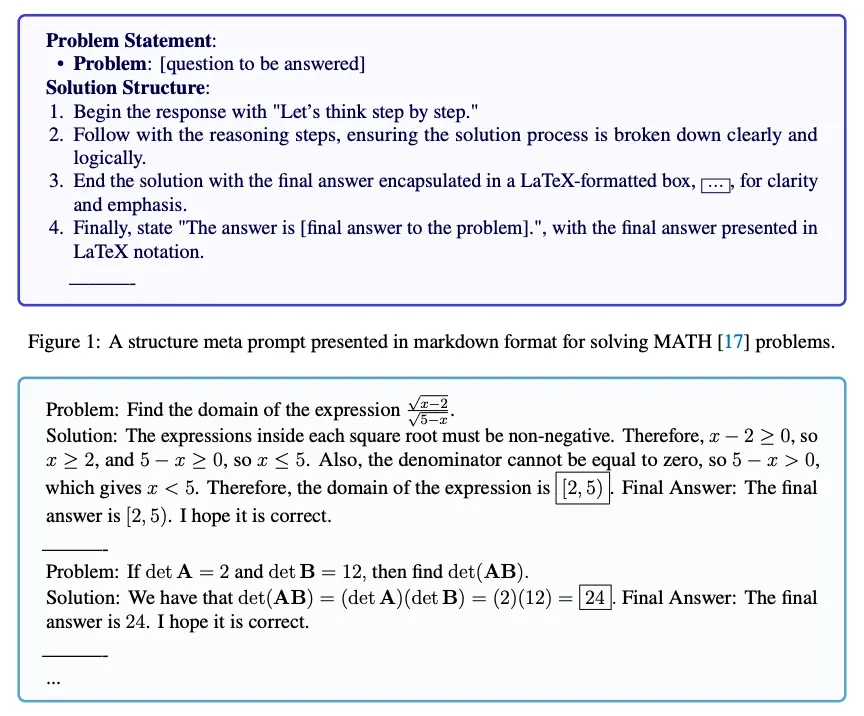

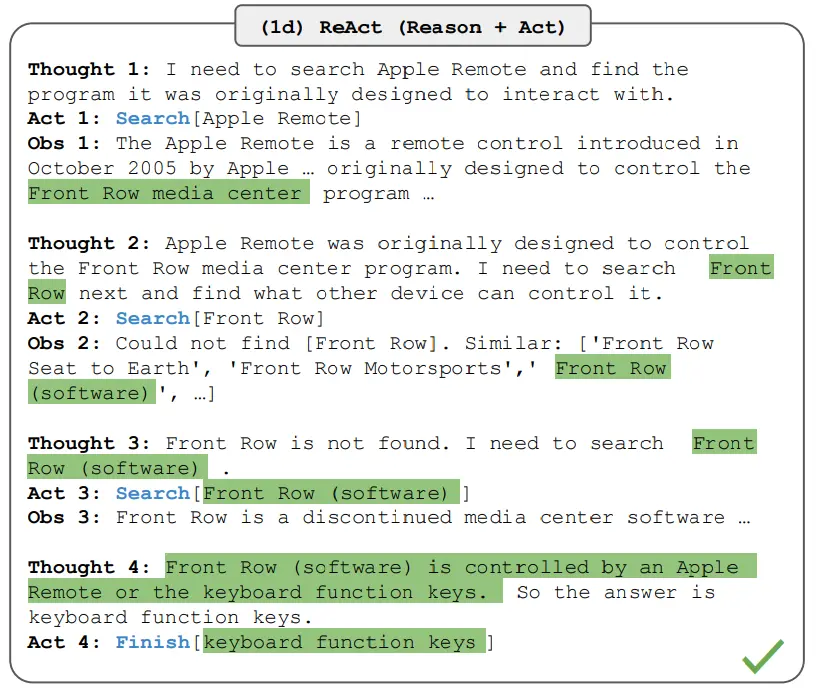

Figure 2: Illustration of Meta prompting from https://www.promptingguide.ai.

In mathematical terms, we can define meta prompting as an iterative, self-referential function where a prompt $P$ does not directly yield a response $R$ but instead produces a refined prompt $P'$ that shapes subsequent model outputs. If $f$ is the function representing the model’s output generation, then in meta prompting, we aim to design $P$ such that $f(P) = P'$ and $f(P') = R$. This approach enables developers to establish a multi-layered interaction with the model, where prompts themselves undergo transformation before arriving at a final output. By controlling the way these prompts influence one another, we can create a structured pipeline of responses that adapts dynamically to varying requirements, fostering more nuanced and targeted interactions.

Meta prompting is particularly useful in applications that require adaptability and subtlety in response generation. For instance, in educational applications, a meta prompt could first instruct the model to adopt a tone and vocabulary level appropriate for a young audience. Once this style is set, the model could then respond to specific questions using simplified language, aligning with educational objectives. In customer service, meta prompting can be used to influence responses based on customer emotion or urgency. An initial meta prompt might detect the sentiment in a customer’s message and guide the model to respond empathetically in cases of negative sentiment, thus shaping responses that align with customer support protocols. The adaptability of meta prompting supports applications across industries, enabling a level of interaction that goes beyond simple, single-stage prompts.

The llm-chain crate in Rust offers a robust framework for implementing meta prompting, allowing developers to define and manage prompt sequences dynamically. The following Rust code demonstrates how to implement a meta prompting approach using llm-chain. In this example, the initial meta prompt shapes the model’s response style by setting a specific tone before the actual question is asked. This example can be particularly useful in customer support or educational settings where tone and approachability are key.

use llm_chain::{chains::sequential::Chain, executor, options, parameters, step::Step};

use llm_chain::prompt::{Data, StringTemplate};

use tokio;

// Meta Prompt Setup: Defining the tone and style for responses

fn meta_prompt_tone() -> Step {

let template = StringTemplate::from("For this conversation, adopt a friendly and conversational tone suitable for young students.");

let prompt = Data::text(template);

Step::for_prompt_template(prompt)

}

// Main Prompt Setup: Crafting the response based on the meta prompt

fn question_prompt() -> Step {

let template = StringTemplate::from("Explain the concept of gravity in simple terms.");

let prompt = Data::text(template);

Step::for_prompt_template(prompt)

}

#[tokio::main(flavor = "current_thread")]

async fn main() -> Result<(), Box<dyn std::error::Error>> {

// Initialize options with the API key

let options = options! {

ApiKey: "sk-proj-..." // Replace with your actual API key

};

// Create a ChatGPT executor

let exec = executor!(chatgpt, options)?;

// Create a chain with both the meta prompt for tone setting and the main question prompt

let chain = Chain::new(vec![meta_prompt_tone(), question_prompt()]);

// Execute the chain

let response = chain.run(parameters!(), &exec).await?;

println!("Response with Meta Prompting: {}", response.to_string());

Ok(())

}

The program defines two functions, meta_prompt_tone and question_prompt, to format and structure the prompts. The meta_prompt_tone function initializes the conversational tone using StringTemplate, which is then converted into a Step that serves as the first part of the prompt sequence. The question_prompt function formats the question about gravity, also as a Step. These steps are combined in a Chain to ensure that the model first considers the tone-setting prompt before processing the question prompt. In the asynchronous main function, the chain is executed using an API key and prints the model’s response, reflecting the conversational tone established by the meta prompt. This setup demonstrates how meta prompting can dynamically adjust a model’s output to align with specific stylistic requirements.

Meta prompting’s power lies in its ability to modulate and customize the model’s behavior in real-time, making it well-suited for applications requiring adaptability. In dialogue systems, for instance, a meta prompt can guide the model to follow predefined conversational rules or adhere to a specific user’s preferences. In creative applications, meta prompting can allow the model to experiment with various narrative voices or genres, guiding the response format while maintaining a creative edge. This adaptability is invaluable in scenarios like interactive storytelling, where user engagement and contextual relevance are crucial. The control over tone, style, and approach provided by meta prompting transforms LLMs into flexible conversational agents capable of adapting to different roles or user expectations on demand.

However, implementing meta prompting requires careful testing and refinement to ensure that prompts produce the intended behavior consistently. Variations in prompt phrasing or content may lead to shifts in the model’s response style, especially if prompts lack specificity. To address this, iterative refinement and A/B testing can be employed to gauge the effectiveness of different meta prompt configurations. Evaluating meta prompts involves both qualitative assessments—examining the coherence and tone of outputs—and quantitative methods, such as measuring user engagement or sentiment. By analyzing these metrics, developers can identify which prompt structures yield the most reliable and context-appropriate responses, fine-tuning meta prompting strategies over time.

In industry applications, meta prompting has demonstrated substantial advantages. In automated content creation, for instance, a publishing platform implemented meta prompting to tailor articles to different audience demographics. The meta prompts were designed to adapt tone and complexity, making content more accessible to various reader segments without altering core information. Similarly, in virtual assistant applications, meta prompting has been used to adjust the assistant’s style and formality based on user interaction history, creating a more personalized and responsive experience. These real-world examples highlight the practical benefits of meta prompting in enhancing user engagement and tailoring responses to meet diverse needs.

Emerging trends in meta prompting research are focused on further enhancing prompt adaptability and responsiveness. One promising direction is dynamic meta prompting, where meta prompts are adjusted in real-time based on feedback or contextual changes within an ongoing interaction. For instance, a virtual assistant might adjust its tone mid-conversation based on detected shifts in user sentiment, switching from a neutral tone to a more empathetic one. This dynamic adaptability can be achieved by coupling meta prompts with real-time sentiment analysis, where Rust’s high concurrency support is advantageous in handling multiple feedback signals efficiently. By continuously adapting to interaction contexts, dynamic meta prompting can create more fluid and responsive conversational systems.

Another frontier in meta prompting is reinforcement learning, where models are trained to prioritize certain response styles or behaviors based on contextual relevance and user feedback. By assigning rewards to responses that align well with meta prompt objectives, reinforcement learning algorithms can fine-tune model outputs, optimizing for coherence, tone, and engagement over time. Rust’s performance efficiency and stability make it well-suited for developing reinforcement learning pipelines for meta prompting, supporting the complex feedback-driven training loops required by such systems.

Ethical considerations in meta prompting are also paramount, especially in scenarios where tone or response style could affect user perceptions or emotional well-being. In customer support, for example, a meta prompt that unintentionally encourages an overly casual tone could risk misinterpretation in serious interactions, leading to potential user dissatisfaction. To address this, developers should design meta prompts with sensitivity to different contexts, ensuring that tone and style adjustments align with user expectations. Transparency around the use of meta prompts is equally essential, particularly in applications like social media moderation or automated content creation, where prompt-driven behavior can influence public discourse. Implementing safeguards within the meta prompting framework, such as tone-checking prompts or response validation steps, can mitigate risks and ensure ethical deployment.

In summary, meta prompting enhances the adaptability and contextual sensitivity of LLMs by enabling prompts to influence the model’s behavior, style, and tone dynamically. Through Rust’s llm-chain crate, developers can implement flexible meta prompts that cater to specific interaction needs, guiding the model’s responses based on predefined or real-time context. Meta prompting not only improves user engagement but also enables applications across diverse fields, from customer service to creative content generation. As research advances in dynamic and reinforcement-based meta prompting, we can expect even greater adaptability and precision in AI-driven interactions. This technique offers a powerful tool for building AI systems that resonate with users, setting new standards for interactive, contextually-aware applications in natural language processing.

22.3. Self-Consistency Prompting

Self-consistency prompting is an advanced and powerful technique in prompt engineering, proposed by Wang et al. (2022), that aims to enhance the reliability and accuracy of large language model (LLM) outputs, particularly in chain-of-thought (CoT) prompting tasks. This method seeks to improve upon the traditional single-response generation approach by replacing the naive greedy decoding typically used in CoT prompting with a strategy that samples multiple, diverse reasoning paths. By leveraging few-shot CoT, self-consistency prompting generates multiple responses to the same prompt, exploring varied reasoning paths to arrive at the answer. The most consistent answer across these different reasoning paths is then selected as the final output, helping to address common challenges in LLM outputs, such as variability due to language nuances, minor prompt adjustments, or the inherent probabilistic nature of generative models.

Self-consistency prompting significantly boosts the performance of CoT prompting on tasks that require logical deduction, arithmetic calculations, and commonsense reasoning. By embracing the natural variability of LLM responses and using it as a mechanism to confirm accuracy, this technique reduces errors, minimizes fluctuations in output, and ultimately leads to more dependable results. Self-consistency is particularly advantageous in high-stakes applications—such as healthcare, finance, and legal services—where reliable, consistent, and precise model outputs are critical for decision-making. This approach not only enhances the robustness of responses but also aligns well with applications where accountability and interpretability are paramount, marking self-consistency as a key innovation in the quest for dependable AI systems.

Mathematically, self-consistency can be represented as a voting or consensus mechanism among multiple responses generated by the model. Let $R = \{r_1, r_2, \ldots, r_n\}$ be a set of $n$ responses generated for a given prompt $P$. In self-consistency prompting, the final output $R_{\text{consistent}}$ is selected based on the frequency or coherence of these responses. One approach is to measure the similarity or overlap among responses and choose the response that aligns most closely with the majority. This can be represented as:

$$ R_{\text{consistent}} = \operatorname{arg\,max}_{r \in R} \sum_{i \neq j} \text{similarity}(r_i, r_j) $$

where $\text{similarity}(r_i, r_j)$ denotes a metric, such as cosine similarity or lexical overlap, that quantifies the agreement between two responses. By selecting the most consistent response, self-consistency prompting acts as a quality filter, reducing the likelihood of producing outlier or biased outputs.

The implementation of self-consistency prompting in Rust is facilitated by the llm-chain crate, which enables the chaining of multiple prompt generations, allowing developers to efficiently create multiple responses and evaluate their consistency. In the following Rust code example, we demonstrate how to implement self-consistency prompting by generating several responses to the same question and selecting the response with the highest similarity score among the set.

use llm_chain::{chains::sequential::Chain, executor, options, parameters, step::Step};

use llm_chain::prompt::{Data, StringTemplate};

use llm_chain::traits::Executor; // Import the Executor trait

use tokio;

use std::collections::HashMap;

// Function to create a prompt for the specified question

fn create_prompt() -> Step {

let prompt_content = "What are the key benefits of using Rust for system programming?";

let template = StringTemplate::from(prompt_content);

let prompt = Data::text(template);

Step::for_prompt_template(prompt)

}

// Function to generate multiple responses for self-consistency

async fn generate_responses(prompt: &Step, exec: &impl Executor, num_responses: usize) -> Vec<String> {

let mut responses = Vec::new();

for _ in 0..num_responses {

let chain = Chain::new(vec![prompt.clone()]);

if let Ok(response) = chain.run(parameters!(), exec).await {

responses.push(response.to_string());

}

}

responses

}

// Function to evaluate and select the most consistent response

fn select_consistent_response(responses: &[String]) -> Option<String> {

let mut frequency_map = HashMap::new();

// Count frequency of each response

for response in responses {

*frequency_map.entry(response.clone()).or_insert(0) += 1;

}

// Select response with highest frequency

frequency_map.into_iter().max_by_key(|&(_, count)| count).map(|(response, _)| response)

}

#[tokio::main(flavor = "current_thread")]

async fn main() -> Result<(), Box<dyn std::error::Error>> {

// Initialize options with your API key

let options = options! {

ApiKey: "sk-proj-..." // Replace with your actual API key

};

// Create a ChatGPT executor

let exec = executor!(chatgpt, options)?;

// Create the main prompt for generating responses

let prompt = create_prompt();

// Generate multiple responses asynchronously for self-consistency

let responses = generate_responses(&prompt, &exec, 5).await;

// Select the most consistent response

if let Some(consistent_response) = select_consistent_response(&responses) {

println!("Most Consistent Response: {:?}", consistent_response);

} else {

println!("No consistent response found.");

}

Ok(())

}

In this example, the generate_responses function generates multiple responses for a given prompt, storing each in a vector. The select_consistent_response function then identifies the response with the highest frequency, selecting the most consistent output as the final answer. This code implementation is particularly useful for questions with factual answers or where consistent response phrasing is crucial. By selecting the most frequently generated response, this approach reduces the impact of anomalies or one-off answers, providing a more stable and reliable output.

Self-consistency is invaluable in applications where the accuracy and stability of answers are critical. In the legal domain, for example, self-consistency prompting can reduce ambiguity in contract analysis or legal interpretations, where varying interpretations of the same prompt can lead to significant differences in understanding. Similarly, in healthcare, where patient outcomes may hinge on the accuracy of model-driven advice, self-consistency prompting helps ensure that recommendations are dependable. These high-stakes contexts benefit greatly from a consistency-focused approach, as it helps mitigate the risks associated with random variability or potentially misleading responses.

Evaluating the effectiveness of self-consistency prompting requires both qualitative and quantitative metrics. From a quantitative perspective, accuracy can be assessed by comparing self-consistency outputs with ground truth data or known answers, while stability can be measured by the reduction in response variability across multiple prompt trials. Qualitatively, human evaluators can examine whether consistent responses demonstrate greater clarity, relevance, and coherence. In practice, evaluation can also involve comparisons with single-response prompting to confirm improvements, using metrics like response similarity scores or manual accuracy assessments.

In terms of industry applications, self-consistency prompting has shown impressive results in fields such as customer service, where the consistency of answers across repeated queries can impact user trust. For instance, a company used self-consistency prompting to enhance the accuracy of its customer support chatbot, achieving uniformity in responses to frequently asked questions. Another application in financial services involved using self-consistency prompting to review investment summaries, reducing the risk of conflicting advice in portfolio recommendations. These real-world implementations underscore the utility of self-consistency prompting in reinforcing accuracy and reliability, critical factors in customer-facing roles where brand reputation depends on dependable responses.

Emerging trends in self-consistency prompting focus on refining the methods of response selection and exploring adaptive consistency mechanisms. One area of research is dynamic weighting, where response consistency is evaluated based on contextual relevance or user feedback. For instance, rather than simply selecting the most frequent response, a dynamic weighting approach could prioritize responses that align with specific keywords or domain-specific concepts. Rust’s high performance and memory safety features make it ideal for building these adaptive self-consistency systems, which require efficient processing of feedback and contextual adjustments.

Another promising trend is integrating reinforcement learning into self-consistency prompting, where models are fine-tuned based on the quality of their consistent outputs. In this approach, models receive rewards for generating responses that align closely with previously consistent answers, gradually reinforcing preferred patterns of response. Rust’s concurrency capabilities facilitate the implementation of reinforcement learning loops, allowing for real-time adjustments to prompt structures or selection criteria based on user feedback. This integration of reinforcement learning enhances the model’s ability to self-correct over time, producing outputs that align more reliably with established consistency criteria.

Ethical considerations in self-consistency prompting are crucial, especially in applications that affect decision-making in sensitive areas. For instance, consistency in financial advice or medical recommendations must be aligned with best practices and ethical standards to prevent the model from producing responses that are consistently wrong or biased. Additionally, there is a risk of over-reliance on consensus, where the selection of consistent responses may inadvertently mask outlier perspectives that could offer valuable insights. To address these risks, developers should implement monitoring mechanisms to ensure that self-consistent responses remain accurate and ethically sound, especially in applications with potentially life-altering impacts.

In conclusion, self-consistency prompting enhances the reliability and accuracy of large language model outputs by selecting the most consistent response from multiple generated outputs. This technique leverages the inherent variability in LLM responses to filter out anomalous or inconsistent answers, improving stability in high-stakes applications. Through Rust’s llm-chain crate, developers can efficiently implement self-consistency prompting, generating dependable results across diverse contexts. As trends in reinforcement learning and dynamic weighting emerge, self-consistency prompting is set to become even more adaptable, paving the way for robust, accurate AI-driven decision-making. In applications where consistency is paramount, self-consistency prompting offers an advanced tool for building trustworthy, reliable LLM systems.

22.4. Generate Knowledge Prompting

Generate Knowledge Prompting is an advanced prompt engineering technique aimed at eliciting informative, contextually rich, and knowledge-driven responses from large language models (LLMs). Unlike standard prompts that focus on direct answers, generate knowledge prompting encourages the model to draw upon its underlying representations and “generate” knowledge in the form of explanations, insights, or elaborations on specific topics. This approach enhances the model’s utility in applications that require nuanced understanding, such as education, research, and information retrieval, where context-rich responses add significant value to end-users. By designing prompts that guide the model toward deeper, more expansive responses, generate knowledge prompting capitalizes on the model’s ability to synthesize information, improving the quality and relevance of outputs.

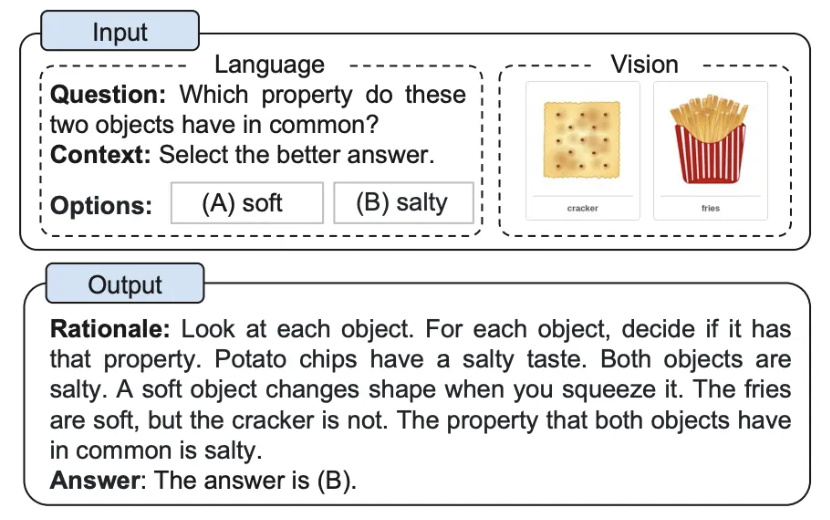

Figure 3: Illustration of Generate Knowledge prompting from https://www.promptingguide.ai.

Mathematically, generate knowledge prompting can be seen as a multi-stage response function, where each generated response $R$ is not merely a direct answer but a collection of knowledge elements $K = \{k_1, k_2, \dots, k_n\}$. Given a prompt $P$ that encourages knowledge generation, the output function $f(P)$ produces a response $R$ where each $k_i$ represents an individual knowledge component, such as a fact, insight, or contextual clarification. This can be represented as:

$$R = f(P) = \sum_{i=1}^{n} k_i$$

where $n$ is the number of distinct knowledge components generated in response to the prompt. By guiding the model to activate its knowledge components in response to prompts, generate knowledge prompting enhances the model’s effectiveness in information-dense tasks, creating responses that are more than simple answers—they are contextually aware explanations.

An effective approach to implementing generate knowledge prompting involves designing prompts that explicitly request background information, reasoning, or detailed explanations. For instance, in an educational application, a prompt might ask the model not only to define a term but also to discuss its historical significance and applications. Such prompts are structured to encourage the model to retrieve and articulate knowledge, fostering a more comprehensive response. Generate knowledge prompting is particularly valuable in applications like tutoring, where understanding the "why" behind an answer enhances the learning experience. It also benefits information retrieval tasks by producing responses that go beyond surface-level information, offering users a more in-depth view of a given topic.

The llm-chain crate in Rust provides a powerful framework for implementing generate knowledge prompting. With llm-chain, prompts can be organized and sequenced to guide the model into producing rich, knowledge-driven responses. The following Rust code demonstrates how to implement generate knowledge prompting by constructing a prompt that encourages the model to elaborate on a topic. In this example, the model is asked not only to define a concept but to discuss its applications and implications, leading to a more informative output.

use llm_chain::{chains::sequential::Chain, executor, options, parameters, step::Step};

use llm_chain::prompt::{Data, StringTemplate};

use llm_chain::traits::Executor;

use tokio;

// Knowledge Prompt Setup: Encouraging the model to elaborate on a topic in-depth

fn generate_knowledge_prompt() -> Step {

let prompt_content = "Explain the concept of 'ecosystem' in ecology. Discuss its components, functions, and provide examples of different types.";

let template = StringTemplate::from(prompt_content);

let prompt = Data::text(template);

Step::for_prompt_template(prompt)

}

#[tokio::main(flavor = "current_thread")]

async fn main() -> Result<(), Box<dyn std::error::Error>> {

// Initialize options with your API key

let options = options! {

ApiKey: "sk-proj-..." // Replace with your actual API key

};

// Create a ChatGPT executor

let exec = executor!(chatgpt, options)?;

// Execute Knowledge Prompting with the generated knowledge prompt

let knowledge_chain = Chain::new(vec![generate_knowledge_prompt()]);

let response = knowledge_chain.run(parameters!(), &exec).await;

match response {

Ok(result) => println!("Knowledge-Driven Response: {}", result.to_string()),

Err(e) => eprintln!("Error generating response: {:?}", e),

}

Ok(())

}

This Rust code uses the llm_chain library to generate a comprehensive knowledge-driven response on the ecological concept of an "ecosystem." The program defines a function, generate_knowledge_prompt, to structure a detailed prompt requesting the model to explain components, functions, and examples of ecosystems. This prompt is created as a StringTemplate, then wrapped in Data::text, and finally encapsulated in a Step for sequential execution. In the asynchronous main function, a ChatGPT executor is initialized with an API key, and the knowledge prompt is added to a Chain, enabling the prompt to run as part of a structured sequence. Using the tokio runtime, the program executes the chain asynchronously and prints the model’s response. This setup promotes depth and thoroughness in the generated response, as the model is guided to provide an elaborative answer. Error handling is also included to manage any issues that arise during execution, ensuring a smooth process.

Generate knowledge prompting has proven especially valuable in scenarios that demand depth and context in responses. In educational applications, for example, generate knowledge prompting allows for explanations that go beyond rote definitions, enriching the learning process with historical context, applications, and cross-disciplinary insights. In customer support, this approach can be used to provide clients with comprehensive answers, explaining both solutions and relevant background information, which fosters a deeper understanding and increases satisfaction. In professional research contexts, knowledge-rich prompts can assist with synthesizing literature or providing quick overviews of complex topics, enabling researchers to access concise yet thorough summaries of key information.

Evaluating the effectiveness of generate knowledge prompting requires both qualitative and quantitative assessment metrics. Qualitatively, human evaluators can assess the coherence, completeness, and depth of the responses, ensuring that generated answers are informative and contextually relevant. Quantitatively, metrics such as response length, topic coverage, and lexical diversity can serve as proxies for measuring the richness of knowledge-based responses. Moreover, user satisfaction metrics, such as user ratings or engagement times, can provide insights into the practical impact of knowledge-rich prompts on end-user experiences.

Industry use cases underscore the transformative potential of generate knowledge prompting. In healthcare, for instance, medical databases have implemented knowledge-rich prompts to assist healthcare professionals in understanding complex conditions by generating summaries of symptoms, treatments, and underlying mechanisms. Similarly, in finance, generate knowledge prompting has been used in risk assessment tools to provide analysts with comprehensive insights into potential risks, including historical data, trends, and market analyses. These applications highlight the versatility of knowledge-driven prompting, demonstrating its value in providing users with meaningful, context-aware information across sectors.

Recent advancements in generate knowledge prompting focus on enhancing the model’s capacity to retrieve domain-specific knowledge and tailor responses based on user intent. One emerging trend is dynamic knowledge prompting, where prompts are adapted based on real-time user interactions or context changes. For example, an educational application might dynamically adjust prompts to align with a student’s learning progress, encouraging the model to elaborate on new topics or provide deeper explanations as needed. Rust’s efficiency and concurrency capabilities are well-suited for implementing dynamic prompting, enabling systems to adapt prompts responsively and in real-time, enhancing the interactivity and relevance of responses.

Another innovation in this area is the integration of external knowledge bases or databases with LLMs, allowing the model to cross-reference its internal representations with verified information sources. This approach ensures that generated knowledge is not only contextually rich but also accurate, providing users with reliable information. Rust’s performance and memory safety features make it an ideal choice for managing the integration of large knowledge databases with LLM pipelines, ensuring that responses are both knowledge-rich and precise.

Ethical considerations play an essential role in generate knowledge prompting, particularly in scenarios where misinformation could have serious consequences. For example, in healthcare or legal advice applications, knowledge-rich prompts must be designed with safeguards to prevent the model from producing inaccurate or misleading information. Developers should implement verification mechanisms to ensure that knowledge-rich responses align with established facts or guidelines. Additionally, transparency around the model’s sources and reasoning process is essential, especially in fields where the origin and reliability of knowledge are critical. By incorporating quality control measures, developers can mitigate risks and ensure that generate knowledge prompting serves as a trustworthy tool across applications.

In conclusion, generate knowledge prompting represents a powerful approach to extracting and presenting informative, contextually rich responses from LLMs. By structuring prompts to encourage knowledge generation, developers can leverage the model’s potential to provide comprehensive, multi-faceted answers that extend beyond simple definitions or direct responses. Rust’s llm-chain crate enables efficient implementation of generate knowledge prompting, providing a structured framework for crafting, managing, and refining knowledge-driven prompts. As trends in dynamic prompting and knowledge base integration continue to evolve, generate knowledge prompting is set to become an indispensable tool in applications that require depth, accuracy, and contextual richness. Whether in education, research, or customer support, generate knowledge prompting enables AI systems to function as reliable sources of insight and information, enhancing user engagement and trust across diverse fields.

22.5. Prompt Chaining

Prompt chaining is an advanced prompt engineering technique that improves the reliability and performance of large language models (LLMs) by breaking complex tasks into a sequence of smaller, interlinked prompts, or “chains.” Instead of presenting a detailed task all at once, prompt chaining allows the model to handle each subtask sequentially, using the response from one prompt as the input for the next. This structured approach preserves context across steps, enabling the model to tackle multi-step problems with clarity and precision. In each stage of the chain, prompts can perform transformations, additional analyses, or data extraction, ultimately building up to the final result. This method not only enhances performance but also boosts transparency, as developers can trace and debug the model’s response at each step.

Prompt chaining is particularly valuable for applications that demand structured analysis, such as document question answering, conversational AI, and complex data extraction tasks. For example, in document QA, the chain might start with a prompt that extracts relevant passages, followed by a second prompt that synthesizes these passages into a cohesive answer. This modular approach not only makes it easier to identify where performance improvements are needed but also increases the controllability and reliability of LLM-driven applications. By managing complex workflows in logical, sequential steps, prompt chaining empowers developers to build more precise, adaptable, and user-focused LLM-powered applications.

In mathematical terms, prompt chaining can be represented as a Markov process, where the state of each prompt $P_n$ depends on the state of the previous prompt $P_{n-1}$ and is used to inform the generation of the next prompt $P_{n+1}$. Let $R_n$ represent the response to prompt $P_n$, such that each prompt-response pair creates a step in a chain that guides the task execution process. The objective is to define a prompt sequence $\{P_1, P_2, \dots, P_n\}$ where each $R_n$ refines or narrows the scope of the task until the final response $R_f$ meets the desired objective. This can be described by the equation:

$$ R_f = f(P_1, R_1, P_2, R_2, \dots, P_n, R_n) $$

where $f$ is the aggregation function that incorporates each response into the final output. This approach ensures that each stage in the sequence is not only relevant to the initial prompt but also contextually aligned with previous stages, allowing the model to approach complex queries in a methodical, organized fashion.

Prompt chaining is especially effective in use cases that require structured analysis or decision-making. In customer service automation, for example, prompt chaining can streamline complex inquiries by dividing them into smaller questions that address different aspects of a customer’s issue, such as account verification, issue type, and potential solutions. In legal document analysis, a chain of prompts can guide the model through stages of extraction, summarization, and risk analysis, ensuring that each step builds on previous findings. This technique is also valuable in creative applications, where generating a complex storyline or structured narrative may benefit from prompts that introduce characters, settings, and plot progression in sequence.

The llm-chain crate in Rust enables prompt chaining by providing a framework for managing prompt sequences, storing responses, and ensuring each prompt is informed by previous outputs. This Rust program demonstrates a sophisticated approach to prompt chaining using the llm_chain library to guide a large language model (LLM) through a multi-step analysis. The task is structured as a series of prompts that gather background information on a company's market performance, identify key issues based on that background, and suggest solutions to those issues. By breaking down the problem into smaller, manageable stages, this code leverages prompt chaining to maintain context across steps, allowing the model to build on its responses progressively.

use llm_chain::{chains::sequential::Chain, executor, options, parameters, step::Step};

use llm_chain::prompt::{Data, StringTemplate};

use tokio;

use std::error::Error;

// Step 1: Define a background prompt to provide company performance details

fn background_prompt() -> Step {

let prompt_content = "Provide background information on the company’s recent performance in the market.";

let template = StringTemplate::from(prompt_content); // Using &str directly

let prompt = Data::text(template);

Step::for_prompt_template(prompt)

}

// Step 2: Define an issues prompt to identify challenges based on background information

fn issues_prompt(previous_response: &str) -> Step {

let prompt_content = format!(

"Based on the following background, identify the key issues faced by the company: {}",

previous_response

);

let template = StringTemplate::from(prompt_content.as_str()); // Convert to &str

let prompt = Data::text(template);

Step::for_prompt_template(prompt)

}

// Step 3: Define a solutions prompt to suggest solutions based on identified issues

fn solution_prompt(previous_response: &str) -> Step {

let prompt_content = format!(

"Considering the identified issues: {}, suggest solutions that could address these challenges.",

previous_response

);

let template = StringTemplate::from(prompt_content.as_str()); // Convert to &str

let prompt = Data::text(template);

Step::for_prompt_template(prompt)

}

#[tokio::main(flavor = "current_thread")]

async fn main() -> Result<(), Box<dyn Error>> {

// Initialize options with your API key

let options = options! {

ApiKey: "sk-proj-..." // Replace with your actual API key

};

// Create a ChatGPT executor

let exec = executor!(chatgpt, options)?;

// Step 1: Gather background information

let background_chain = Chain::new(vec![background_prompt()]);

let background = background_chain.run(parameters!(), &exec).await?.to_string();

// Step 2: Identify issues based on background information

let issues_chain = Chain::new(vec![issues_prompt(&background)]);

let issues = issues_chain.run(parameters!(), &exec).await?.to_string();

// Step 3: Propose solutions based on identified issues

let solution_chain = Chain::new(vec![solution_prompt(&issues)]);

let solutions = solution_chain.run(parameters!(), &exec).await?.to_string();

// Final output combining results from all steps

println!("Background: {:?}", background);

println!("Identified Issues: {:?}", issues);

println!("Suggested Solutions: {:?}", solutions);

Ok(())

}

In the code, each step is defined as a function that creates a StringTemplate for a specific prompt and wraps it in Data::text, turning it into a Step compatible with the llm_chain library. The asynchronous main function orchestrates the sequence of these prompts using Chain. First, it runs a background_chain to retrieve market performance details. This response is passed to the issues_chain to identify challenges, and the issues response is subsequently provided to the solution_chain to generate solutions. Using the tokio runtime for asynchronous execution, each chain step is awaited, ensuring ordered and contextually aware responses from the model. This approach showcases how prompt chaining can improve response reliability and transparency, especially for complex multi-step tasks.

Prompt chaining has significant advantages in tasks that require sequential reasoning or multi-step problem-solving. In the healthcare sector, for example, a model could be used to assess patient symptoms, run through potential diagnoses, and suggest next steps or treatments, with each stage refining the information provided in previous steps. This approach can be applied in educational platforms, where a chain of prompts could guide students through problem-solving exercises by first reviewing key concepts, then applying them to examples, and finally encouraging independent solution attempts. The sequential nature of prompt chaining enables these complex interactions to be handled in a way that feels both logical and supportive to the end user.

To evaluate the effectiveness of prompt chaining, developers can use several qualitative and quantitative metrics. Qualitatively, evaluating coherence and relevance at each stage ensures that each prompt-response pair contributes constructively to the overall goal. Quantitatively, metrics such as task completion rate, response accuracy, and time to completion can provide insights into the efficiency and precision of the prompt chain. Additionally, user feedback or performance on structured tasks can offer real-world validation, as prompt chaining often provides a more granular and transparent process for model-driven interactions.

Real-world case studies demonstrate the powerful potential of prompt chaining. In finance, prompt chaining has been used to analyze complex financial transactions, where a sequence of prompts guides the model through stages of transaction extraction, risk assessment, and regulatory compliance checks. Another prominent application is in customer support systems that handle multifaceted issues, where chaining prompts enables the model to systematically diagnose the problem, search for solutions, and communicate a clear resolution plan to customers. The effectiveness of prompt chaining in these cases lies in its ability to maintain focus on specific aspects of a task, enabling deeper and more accurate insights as the chain progresses.

Emerging trends in prompt chaining research are focusing on dynamic and adaptive chaining strategies. One promising approach is the use of reinforcement learning to adjust prompt chains based on real-time feedback, where successful chains are reinforced to improve future performance. This can enable models to dynamically adjust the structure and content of a chain based on the complexity of the user’s input, creating a more tailored experience. Rust’s concurrency capabilities provide an advantage in this context, as it enables efficient handling of these adaptive chains even in high-demand scenarios. Dynamic chaining could also include real-time decision nodes within a chain, allowing models to pivot based on user responses or task progress, a feature well-suited for interactive applications.

Ethical considerations are crucial in prompt chaining, particularly in applications that impact users’ decision-making. Because each step in a chain builds on prior responses, errors or biases can propagate through the sequence, potentially magnifying their impact. For example, in applications such as legal advice or healthcare diagnostics, an initial inaccurate response could mislead subsequent steps, creating compounded inaccuracies. To mitigate these risks, developers should implement checkpoints or verification steps within chains to identify and correct issues before they escalate. Additionally, prompt chains used in sensitive applications should be transparent, allowing users to understand the rationale behind each step and fostering trust in model-driven outcomes.

In conclusion, prompt chaining represents a powerful, structured approach to handling complex tasks in large language models by breaking down interactions into sequential, manageable steps. This technique improves model performance in multi-step reasoning and task execution, enabling applications in fields as diverse as finance, healthcare, and education. Rust’s llm-chain crate provides an efficient foundation for implementing prompt chaining, supporting dynamic management of prompt sequences to ensure logical and coherent task progression. With advancements in dynamic chaining and adaptive reinforcement learning, prompt chaining is poised to become an essential tool in developing responsive, context-aware AI systems. This technique not only enhances the clarity and reliability of model outputs but also brings a level of transparency and control to complex interactions, making AI-driven solutions more accessible and effective for a wide range of applications.

22.6. Tree of Thoughts Prompting

Tree of Thoughts (ToT) is an advanced framework in prompt engineering, recently introduced by Yao et al. (2023) and Long (2023), to enhance language models (LLMs) in tackling complex tasks requiring strategic exploration and lookahead. Traditional prompt engineering methods, including chain-of-thought prompting, often fall short in tasks where exploring alternative reasoning paths or iterating through multi-step solutions is necessary. ToT addresses this by organizing the reasoning process into a tree structure, where each "thought" represents an intermediate step or sub-solution. This setup allows the model to generate, evaluate, and build upon thoughts through deliberate, systematic exploration, using search algorithms such as breadth-first search (BFS), depth-first search (DFS), or beam search to evaluate different thought paths.

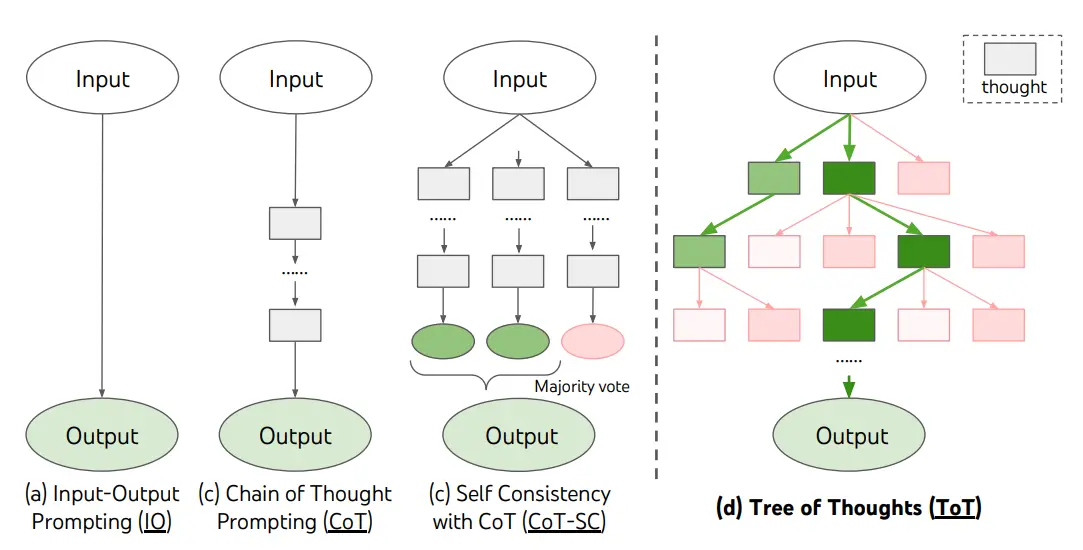

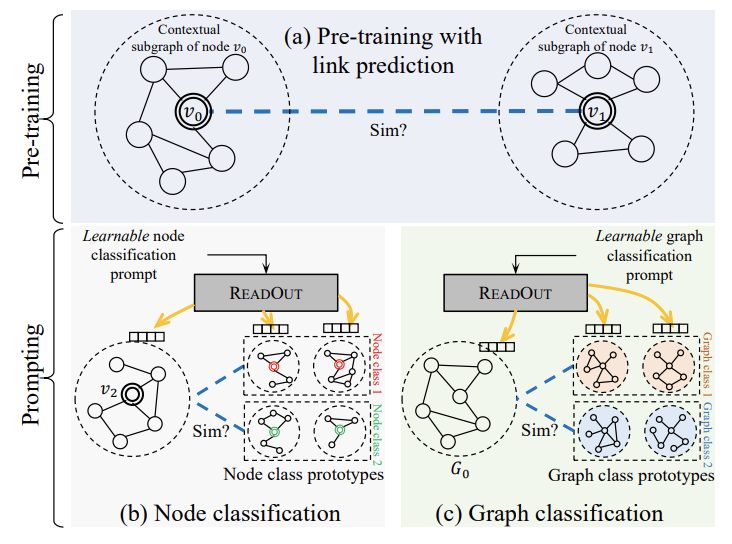

Figure 4: Illustration of CoT, CoT-SC and ToT from https://www.promptingguide.ai.

ToT’s approach involves specifying the number of candidate thoughts and steps at each decision point, enabling iterative refinement toward the solution. For example, in mathematical reasoning tasks like the Game of 24, the model decomposes the problem into multiple steps, keeping only the top candidates at each level of reasoning based on evaluations like "sure," "maybe," or "impossible." This process enables the model to narrow down on promising paths while discarding implausible ones early, guided by commonsense evaluations such as "too big/small." Advanced variations of ToT, such as those proposed by Long (2023), include reinforcement learning-driven ToT Controllers that adapt search strategies through self-learning, enhancing problem-solving capabilities beyond generic search techniques.

Recent adaptations, such as Hulbert's Tree-of-Thought Prompting, apply ToT principles in simpler prompt setups by having the LLM generate multiple intermediate steps within a single prompt. This version prompts the model to emulate a panel of "experts" who explore various reasoning paths collectively, allowing it to self-correct along the way. Furthermore, Sun (2023) has expanded on this idea with PanelGPT, benchmarking ToT prompting through large-scale experiments and introducing discussion-based approaches for generating balanced solutions. Overall, Tree of Thoughts represents a significant leap forward in enhancing the decision-making and problem-solving capabilities of LLMs, enabling them to systematically evaluate and refine complex tasks through multiple paths of reasoning.

Mathematically, Tree of Thoughts prompting can be modeled as a search over a tree structure, where each node represents a state or partial solution in the thought process, and each edge represents a step in reasoning from one state to another. Let $T(P)$ denote a tree structure rooted at the initial prompt $P$. The model generates multiple branches at each node $N$, with each branch $b_i$ representing a different approach or intermediate solution. The goal is to explore paths $\{b_1, b_2, \dots, b_k\}$ and evaluate the outcome of each path based on a scoring or selection function $f(b)$, ultimately selecting the path with the highest score. The overall decision-making process can be formalized as:

$$ \text{Best Path} = \operatorname{arg\,max}_{b \in T(P)} f(b) $$

where $f(b)$ is a function that evaluates the quality or effectiveness of each thought path $b$. This structured exploration allows the model to make more informed decisions, considering various perspectives and approaches before arriving at a final answer.

Tree of Thoughts prompting is particularly useful in applications where decisions require multi-step reasoning and benefit from evaluating diverse perspectives. For instance, in financial planning, generating multiple investment strategies and assessing each for risk and return allows the model to recommend the best approach tailored to specific goals. In healthcare, exploring multiple diagnostic paths based on patient symptoms and medical history enables the model to consider differential diagnoses before suggesting a final recommendation. This technique is also valuable in creative fields, such as narrative generation, where multiple story arcs can be generated and evaluated to select the most engaging storyline.

Implementing Tree of Thoughts prompting in Rust is made possible through the llm-chain crate, which supports branching and chaining of prompts. Using llm-chain, developers can construct a tree structure where each node represents a prompt, and each branch represents a different thought path. This Rust program demonstrates an advanced implementation of the Tree of Thoughts (ToT) framework using the llm_chain library to guide a large language model (LLM) through multiple reasoning paths for a complex task. The task involves generating and evaluating multiple approaches to improve user engagement on a social media platform. By prompting the LLM to suggest different strategies and scoring each response, the program helps the model identify the most optimal solution. This approach enables systematic exploration and selection of the best thought path, enhancing the model's ability to solve multi-step, nuanced problems.

use llm_chain::{chains::sequential::Chain, executor, options, parameters, step::Step};

use llm_chain::prompt::{Data, StringTemplate};

use tokio;

use std::collections::HashMap;

// Function to create the root prompt for generating initial thoughts

fn root_prompt() -> Step {

let prompt_content = "Suggest approaches for increasing user engagement on a social media platform.";

let template = StringTemplate::from(prompt_content);

let prompt = Data::text(template);

Step::for_prompt_template(prompt)

}

// Function to generate multiple thought paths asynchronously

async fn generate_thought_paths(exec: &impl llm_chain::traits::Executor, prompt: &Step, num_paths: usize) -> Vec<String> {

let mut responses = Vec::new();

for _ in 0..num_paths {

let chain = Chain::new(vec![prompt.clone()]);

if let Ok(response) = chain.run(parameters!(), exec).await {

responses.push(response.to_string());

}

}

responses

}

// Custom scoring function to evaluate path quality based on defined criteria

fn evaluate_path_quality(path: &str) -> i32 {

// Example scoring logic: score based on length and keyword presence

let score = path.len() as i32;

let engagement_keywords = ["engagement", "retention", "growth"];

for keyword in &engagement_keywords {

if path.contains(keyword) {

return score + 10; // Higher score if specific keywords are found

}

}

score

}

// Function to evaluate and select the best thought path based on scoring

fn evaluate_paths(paths: Vec<String>) -> Option<String> {

let mut scores = HashMap::new();

for path in paths {

let score = evaluate_path_quality(&path);

scores.insert(path.clone(), score);

}

scores.into_iter().max_by_key(|&(_, score)| score).map(|(path, _)| path)

}

#[tokio::main(flavor = "current_thread")]

async fn main() -> Result<(), Box<dyn std::error::Error>> {

// Initialize options with your API key

let options = options! {

ApiKey: "sk-proj-..." // Replace with your actual API key

};

// Create a ChatGPT executor

let exec = executor!(chatgpt, options)?;

// Generate the root prompt

let prompt = root_prompt();

// Generate and evaluate multiple thought paths

let paths = generate_thought_paths(&exec, &prompt, 3).await;

if let Some(best_path) = evaluate_paths(paths) {

println!("Best Thought Path: {}", best_path);

} else {

println!("No optimal path found.");

}

Ok(())

}

In this code, a root prompt is first set up to request engagement strategies. Using the generate_thought_paths function, the program creates three potential responses (thought paths) by running the root prompt multiple times with asynchronous execution, leveraging the tokio runtime for efficiency. Each response is scored based on criteria set in the evaluate_path_quality function, where certain keywords relevant to engagement boost the path’s score. The evaluate_paths function then selects the response with the highest score, representing the best thought path. This setup allows the LLM to explore diverse solutions in a structured manner, facilitating better decision-making for tasks with multiple solution paths.

Tree of Thoughts prompting offers significant advantages in domains where decisions require careful consideration of multiple factors. For example, in business strategy, generating alternative business plans and evaluating their feasibility allows for informed decision-making that weighs potential risks and returns. In educational tools, this approach enables the generation of alternative learning paths for students, evaluating each based on factors like difficulty and relevance to student goals. By guiding the model through a structured exploration of possibilities, Tree of Thoughts prompting facilitates decisions that are well-rounded, comprehensive, and tailored to complex scenarios.

To assess the effectiveness of Tree of Thoughts prompting, developers can employ both quantitative and qualitative evaluation techniques. Quantitative evaluation might involve scoring the selected path based on predefined criteria such as accuracy, relevance, or solution quality. Qualitative methods, including user feedback or expert review, can be used to assess whether the selected thought path aligns with domain-specific best practices or expectations. Additionally, metrics like completion time and consistency across multiple prompt chains provide insights into the efficiency and reliability of the Tree of Thoughts approach, helping developers refine the model’s exploration and selection processes.

Several real-world case studies highlight the impact of Tree of Thoughts prompting in enhancing decision-making. For example, in recommendation systems, Tree of Thoughts prompting enables the model to generate and evaluate various recommendation pathways based on user preferences and historical behavior. In risk management, this technique allows models to explore and rank mitigation strategies based on potential impact and cost-effectiveness, providing organizations with a robust decision framework. Such applications demonstrate how Tree of Thoughts prompting can improve outcomes by introducing a structured, evaluative approach to complex problem-solving.

Recent trends in Tree of Thoughts prompting are focused on integrating reinforcement learning to optimize path selection dynamically. By using reinforcement signals, models can learn to prioritize certain paths based on historical performance, adjusting their exploration strategies in real-time. Additionally, dynamic Tree of Thoughts prompting, where paths are generated and evaluated interactively based on user input, offers promising applications in fields like personalized tutoring or interactive storytelling. Rust’s concurrency features are particularly advantageous in these interactive setups, enabling rapid evaluation of paths and adaptive adjustment of the tree structure based on user responses or model feedback.

Ethical considerations are essential in the implementation of Tree of Thoughts prompting, especially in applications that impact user choices or behavior. For example, in recommendation systems, selecting paths based solely on engagement metrics could inadvertently reinforce addictive behavior or skewed content consumption. To mitigate these risks, developers should design scoring functions that balance user engagement with factors such as informational value or user well-being. Additionally, transparency mechanisms that reveal the model’s thought paths or decision rationale can help users understand the reasoning behind specific recommendations, fostering trust and accountability.

In conclusion, Tree of Thoughts prompting offers a robust framework for enhancing the decision-making capabilities of large language models. By generating and evaluating multiple thought paths, this technique enables models to explore complex decision spaces and arrive at well-reasoned conclusions, making it invaluable for applications that require structured reasoning and multi-step problem-solving. Rust’s llm-chain crate provides a suitable foundation for implementing this technique, supporting the creation, management, and evaluation of multi-path prompt structures. As trends in reinforcement learning and adaptive path selection continue to evolve, Tree of Thoughts prompting is set to become an increasingly valuable tool in developing models that are both insightful and reliable in their reasoning processes. This technique not only enhances model accuracy and relevance but also brings a level of rigor and transparency to AI-driven decision-making, empowering users with AI solutions that are both informative and ethically responsible.

22.7. Automatic Prompt Engineer

Automatic Prompt Engineering (APE) marks a groundbreaking advancement in prompt design for large language models (LLMs), transforming what was once a manual, iterative process into an automated, algorithm-driven approach. Traditionally, prompt engineering has required human expertise to craft and refine prompts through trial and error, often demanding deep knowledge and time-consuming experimentation. APE aims to eliminate this dependency by automating the creation, evaluation, and optimization of prompts, using sophisticated algorithms that generate and test prompts with minimal human intervention. By incorporating optimization techniques, heuristic evaluations, and black-box search strategies, APE significantly accelerates prompt fine-tuning, ensuring high-quality outputs while streamlining LLM deployment across diverse applications. The overarching goal of APE is to reduce both the time and expertise needed to develop effective prompts, making high-performance prompting more accessible and efficient.

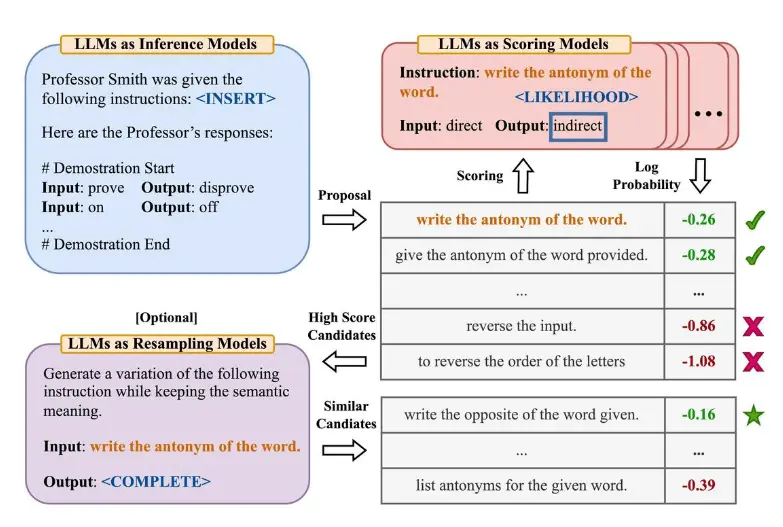

Figure 5: Automatic Prompt Engineer prompting from https://www.promptingguide.ai.

In their 2022 study, Zhou et al. introduced the APE framework, which reframes prompt engineering as a problem of natural language synthesis and search. In this framework, LLMs generate a range of instruction candidates based on task-specific output demonstrations. These instructions undergo evaluation within a target model, with the most effective instruction selected based on calculated scores, guiding the search toward high-performing prompts. Notably, APE has demonstrated the potential to surpass manually crafted prompts, discovering zero-shot Chain-of-Thought (CoT) prompts that outperform the widely used "Let's think step by step" directive from Kojima et al. (2022). For example, the APE-generated prompt, "Let's work this out in a step by step way to be sure we have the right answer," improved accuracy in benchmarks like MultiArith and GSM8K. Key studies exploring prompt optimization further include Prompt-OIRL, which uses inverse reinforcement learning for query-dependent prompts; OPRO, where simple phrases like "Take a deep breath" have shown marked improvements in mathematical problem-solving; and techniques like AutoPrompt and Prompt Tuning, which automate prompt creation via gradient-guided search and backpropagation. Collectively, these advancements push the boundaries of LLM capabilities, setting a new standard for adaptable, high-efficiency prompt engineering.Mathematically, APE can be conceptualized as an optimization problem.

Let $P$ represent the space of possible prompts, and let $f(P)$ denote a function that evaluates the effectiveness of each prompt $P$. The goal of APE is to identify an optimal prompt $P^*$ that maximizes $f(P)$ based on evaluation metrics such as relevance, coherence, and task accuracy. Formally, this can be represented as:

$$P^* = \operatorname{arg\,max}_{P \in P} f(P)$$

This optimization may be achieved through various techniques, such as genetic algorithms, reinforcement learning, or gradient-based methods, where each iteration involves generating candidate prompts, evaluating them, and retaining those that best align with the target objective.

Automatic prompt generation is particularly valuable in dynamic environments, such as customer service and financial analysis, where LLM requirements frequently change. For instance, in customer service, a company may need prompts that are effective across different customer concerns and product lines. APE can quickly generate prompts tailored to these diverse contexts by autonomously exploring prompt variations. In e-commerce applications, where consumer demands and product categories evolve continuously, APE can adapt prompts in real-time to reflect these shifts, enabling the LLM to stay relevant and accurate.

Implementing Automatic Prompt Engineering in Rust can be effectively managed with the llm-chain crate, which provides robust support for managing and iterating on prompts. By using Rust’s performance and concurrency features, developers can create a pipeline that rapidly generates, tests, and refines prompts. The following example demonstrates how to set up an APE pipeline in Rust using llm-chain, where candidate prompts are generated, evaluated, and optimized over multiple iterations.

use llm_chain::{chains::sequential::Chain, executor, options, parameters, step::Step};

use llm_chain::prompt::{Data, StringTemplate};

use tokio;

use std::error::Error;

// Function to generate candidate prompts

fn generate_candidates(base_prompt: &str, variations: Vec<&str>) -> Vec<Step> {

variations

.into_iter()

.map(|v| {

let prompt_content = format!("{} {}", base_prompt, v);

let template = StringTemplate::from(prompt_content.as_str());

let prompt = Data::text(template);

Step::for_prompt_template(prompt)

})

.collect()

}

// Function to evaluate prompt effectiveness (simple scoring logic based on response length)

async fn evaluate_prompt(exec: &impl llm_chain::traits::Executor, prompt: &Step) -> f32 {

let chain = Chain::new(vec![prompt.clone()]);

if let Ok(response) = chain.run(parameters!(), exec).await {

response.to_string().len() as f32 // Score based on response length

} else {

0.0 // Default score if evaluation fails

}

}

// Optimization loop for selecting the best prompt

async fn optimize_prompt(exec: &impl llm_chain::traits::Executor, base_prompt: &str, variations: Vec<&str>, iterations: usize) -> Step {

let mut best_prompt = Step::for_prompt_template(Data::text(StringTemplate::from(base_prompt)));

let mut best_score = 0.0;

for _ in 0..iterations {

let candidates = generate_candidates(base_prompt, variations.clone());

for candidate in candidates {

let score = evaluate_prompt(exec, &candidate).await;

if score > best_score {

best_score = score;

best_prompt = candidate.clone();

}

}

}

best_prompt

}

#[tokio::main(flavor = "current_thread")]

async fn main() -> Result<(), Box<dyn Error>> {

// Initialize options with your API key

let options = options! {

ApiKey: "sk-proj-..." // Replace with your actual API key

};

// Create a ChatGPT executor

let exec = executor!(chatgpt, options)?;

// Define the base prompt and variations

let base_prompt = "Analyze the current trends in social media engagement";

let variations = vec![

"with a focus on user growth",

"considering demographic data",

"including recent technological advances",

];

// Optimize the prompt

let optimized_prompt = optimize_prompt(&exec, base_prompt, variations, 5).await;

println!("Optimized Prompt: {:?}", optimized_prompt);

Ok(())

}

In this code, generate_candidates produces prompt variations by appending different phrases to the base prompt. Each candidate prompt is then evaluated in the evaluate_prompt function, which scores it based on the length of the generated response—a simple proxy for relevance and richness of the response, though more complex scoring functions could be implemented. The optimize_prompt function iteratively refines the prompts, selecting the one with the highest score as the most effective prompt for the given task.

Automatic Prompt Engineering has significant benefits across industries by reducing dependency on human intervention and enabling rapid adaptation to new contexts. In healthcare, for example, where medical terminology and protocols frequently evolve, APE allows models to update prompts without manual redesign, ensuring that responses remain clinically accurate and relevant. In content generation, APE can enhance creative applications by automatically generating diverse prompts that encourage unique outputs, such as varied narratives or stylistic choices in creative writing.

Evaluating the effectiveness of APE-generated prompts can be approached using both quantitative and qualitative metrics. Quantitative measures might include output coherence, relevance, and completion rate. Qualitatively, expert reviews and user satisfaction surveys provide insights into the practicality and applicability of the generated prompts in real-world scenarios. Further, APE pipelines can benefit from reinforcement learning, where successful prompts are reinforced and unsuccessful ones are adjusted or discarded based on feedback, creating a self-improving system that continuously refines its prompt generation strategy.