Chapter 10

Open Foundational LLMs

"The future of AI lies in the open-source movement, where foundational models can be adapted and improved by the community, driving innovation and ensuring that the benefits of AI are accessible to all." — Yann LeCun

Chapter 10 of LMVR offers an in-depth exploration of building, fine-tuning, and deploying open foundational language models (LLMs) using Rust and the HuggingFace Candle crate. The chapter begins by introducing the significance of open foundational LLMs and the advantages of leveraging open-source models in NLP. It then provides a detailed guide to setting up a Rust environment tailored for LLM development, including loading and fine-tuning pre-trained models for specific tasks. The chapter also covers the deployment of LLMs, discussing strategies for optimizing scalability, latency, and resource efficiency, and exploring customization techniques to extend model capabilities. Finally, it addresses the challenges and future directions in the development of LLMs, emphasizing the role of Rust in pushing the boundaries of what these models can achieve.

10.1. Introduction to Foundational LLMs

The emergence of foundational language models (LLMs) has revolutionized NLP by enabling models trained on extensive datasets to capture intricate linguistic patterns and contextual dependencies. Models like GPT and BERT, designed with general-purpose architectures, excel across various NLP tasks, from sentiment analysis to question-answering. Their versatility allows them to be fine-tuned for specific tasks with minimal data, making them a cornerstone of recent NLP advancements. Hugging Face's open-source foundational models further democratize AI by providing transparent, modifiable architectures that encourage widespread experimentation and innovation. Researchers, startups, and enterprises can customize these robust models to meet specific needs without relying on proprietary, resource-intensive systems.

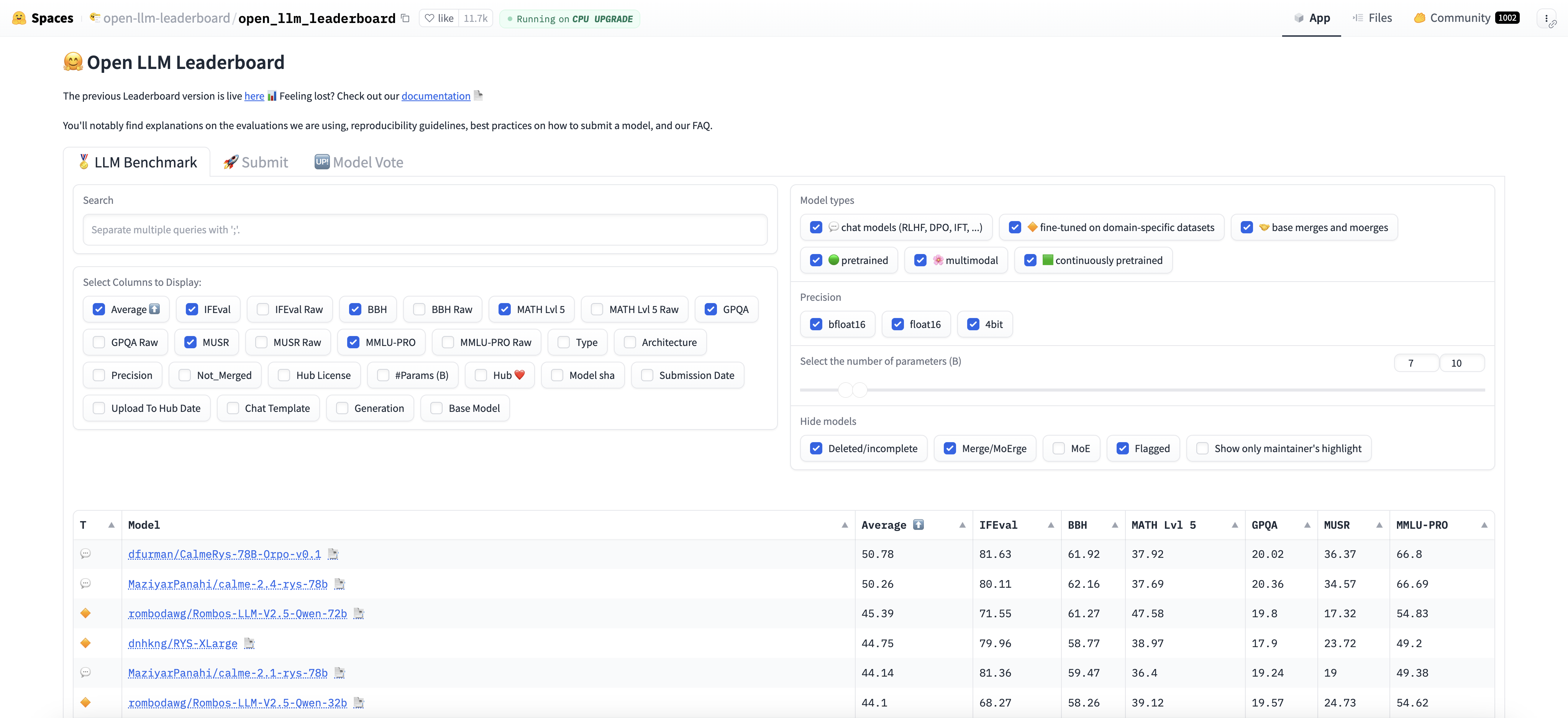

Open-source foundational models foster accessibility and flexibility, allowing developers from all sectors—including small-scale innovators—to adapt high-performance models to their unique applications, whether in healthcare, finance, or media. Hugging Face’s Open Leaderboard for LLMs enriches this ecosystem by making these models openly available, promoting transparency, and encouraging diverse contributions within the AI community. The adaptability of open-source LLMs empowers developers to build domain-specific solutions cost-effectively, advancing AI-driven innovation in specialized fields without dependency on closed, costly systems.

Figure 1: Open LLM Leaderboard from Hugging Face.

A key distinction in the AI ecosystem is between foundational and task-specific models. Foundational models act as generalized, pre-trained architectures that capture language patterns, whereas task-specific models optimize these foundational capabilities for particular applications. The transparency and reproducibility of open-source models allow researchers to understand, validate, and enhance their structures, ensuring robust performance across a wide range of applications. However, the open-source nature of foundational models also introduces ethical considerations. Since these models are often trained on public data, biases in the training datasets can propagate through the model, leading to skewed or unintended outputs. Furthermore, the extensive data required to train such models raises privacy concerns, as models might inadvertently learn sensitive information. Addressing these challenges necessitates active efforts within the open-source community to debias models and safeguard user privacy, promoting responsible AI development.

With Rust's efficient, low-level programming capabilities, implementing a foundational LLM pipeline becomes a powerful yet performance-conscious task. Candle, a Rust library for deep learning, offers a robust API for loading and interacting with foundational models from HuggingFace's model zoo. The following example demonstrates an advanced setup for implementing an LLM pipeline using the Candle crate, showcasing model loading, tokenization, and inference.

Setting up the environment begins with loading a foundational model such as GPT-2 and implementing a basic inference pipeline. This pipeline will handle model loading, tokenization, and text generation, allowing us to experiment with input-output dynamics. This Rust program uses the tokenizers and reqwest libraries to download a GPT-2 tokenizer from Hugging Face if it doesn’t already exist locally, then tokenizes and decodes an input text prompt. It checks for the existence of a tokenizer.json file within a specified directory (models/gpt2-tokenizer), creating the directory if needed and downloading the file if it is missing. The program then tokenizes a given input text prompt, converts the tokenized output back into text, and displays it.

[dependencies]

anyhow = "1.0.90"

candle-core = "0.7.2"

candle-examples = "0.7.2"

candle-nn = "0.7.2"

candle-transformers = "0.7.2"

tokenizers = "0.20.1"

reqwest = { version = "0.11", features = ["blocking"] }

use tokenizers::tokenizer::Tokenizer;

use std::error::Error;

use std::fs;

use std::io::Write;

use std::path::Path;

use reqwest::blocking::get;

fn main() -> std::result::Result<(), Box<dyn Error + Send + Sync>> {

// Define the path to the tokenizer directory and file

let tokenizer_dir = Path::new("models/gpt2-tokenizer");

let tokenizer_path = tokenizer_dir.join("tokenizer.json");

// Step 1: Check if the directory exists; if not, create it.

if !tokenizer_dir.exists() {

fs::create_dir_all(&tokenizer_dir)?;

println!("Directory created at: {:?}", tokenizer_dir);

}

// Step 2: Check if the tokenizer.json file exists; if not, download it.

if !tokenizer_path.exists() {

println!("Downloading tokenizer.json...");

// URL of the tokenizer.json file (GPT-2 tokenizer on Hugging Face)

let url = "https://huggingface.co/gpt2/resolve/main/tokenizer.json";

// Download the file

let response = get(url)?;

if response.status().is_success() {

let mut file = fs::File::create(&tokenizer_path)?;

file.write_all(&response.bytes()?)?;

println!("Downloaded tokenizer.json to {:?}", tokenizer_path);

} else {

println!("Failed to download tokenizer.json. Please check the URL or your internet connection.");

return Ok(());

}

}

// Step 3: Load the tokenizer using the tokenizers crate

let tokenizer = Tokenizer::from_file(&tokenizer_path.to_str().unwrap())?;

// Step 4: Define the input prompt and tokenize it.

let input_text = "The impact of open-source models on modern AI is";

let encoding = tokenizer.encode(input_text, true)?;

// Step 5: Decode the tokens back into readable text.

let generated_text = encoding.get_ids()

.iter()

.map(|id| tokenizer.id_to_token(*id).unwrap_or("[UNK]".to_string())) // Convert "[UNK]" to String

.collect::<Vec<_>>()

.join(" ");

println!("Generated Text (tokenized and decoded): {}", generated_text);

Ok(())

}

In this example, we begin by loading a foundational model, specifically GPT-2, with load_model. The Tokenizer is initialized, using a compatible pre-trained tokenizer to prepare input text for the model. Tokenization is essential for converting natural language input into tokenized representations that the model can process. The input prompt is tokenized with encode, allowing the model to work with a sequence of integer tokens. Using the TextGenerator, the model processes the input tokens to generate a coherent continuation, showcasing its language generation abilities. Finally, the generated tokens are decoded back into readable text with decode.

Moving into advanced inference, foundational models can be extended to tackle varied NLP tasks such as text classification and named entity recognition. Expanding the pipeline provides a broader exploration of the model’s capacities and enables developers to assess performance across diverse applications. This Rust program sets up a basic framework for performing text classification and Named Entity Recognition (NER) using tokenized input text. It downloads and initializes a GPT-2 tokenizer if it doesn’t exist locally, then uses it to tokenize input sentences for classification and NER tasks. Although placeholder functions are currently used to simulate these NLP tasks, the structure is ready for integration with a real model capable of handling token classification and sequence classification.

use tokenizers::tokenizer::Tokenizer;

use std::error::Error;

use std::fs;

use std::io::Write;

use std::path::Path;

use reqwest::blocking::get;

// Placeholder function for text classification

fn classify_text(_tokens: Vec<u32>) -> String {

// In a real application, pass tokens to a loaded model and interpret the output.

// Here, we return a dummy classification result.

"Positive".to_string()

}

// Placeholder function for Named Entity Recognition (NER)

fn recognize_entities(_tokens: Vec<u32>) -> Vec<(String, String)> {

// In a real application, pass tokens to a loaded model and interpret the output.

// Here, we return dummy entities.

vec![

("Elon Musk".to_string(), "PERSON".to_string()),

("Tesla".to_string(), "ORG".to_string()),

("California".to_string(), "LOCATION".to_string())

]

}

fn main() -> std::result::Result<(), Box<dyn Error + Send + Sync>> {

// Define the path to the tokenizer directory and file

let tokenizer_dir = Path::new("models/gpt2-tokenizer");

let tokenizer_path = tokenizer_dir.join("tokenizer.json");

// Step 1: Check if the directory exists; if not, create it.

if !tokenizer_dir.exists() {

fs::create_dir_all(&tokenizer_dir)?;

println!("Directory created at: {:?}", tokenizer_dir);

}

// Step 2: Check if the tokenizer.json file exists; if not, download it.

if !tokenizer_path.exists() {

println!("Downloading tokenizer.json...");

// URL of the tokenizer.json file (GPT-2 tokenizer on Hugging Face)

let url = "https://huggingface.co/gpt2/resolve/main/tokenizer.json";

// Download the file

let response = get(url)?;

if response.status().is_success() {

let mut file = fs::File::create(&tokenizer_path)?;

file.write_all(&response.bytes()?)?;

println!("Downloaded tokenizer.json to {:?}", tokenizer_path);

} else {

println!("Failed to download tokenizer.json. Please check the URL or your internet connection.");

return Ok(());

}

}

// Step 3: Load the tokenizer using the tokenizers crate

let tokenizer = Tokenizer::from_file(&tokenizer_path.to_str().unwrap())?;

// Task 1: Text Classification

let classification_input = "This new technology is groundbreaking";

let classification_encoding = tokenizer.encode(classification_input, true)?;

let classification_tokens = classification_encoding.get_ids().to_vec();

let classification_result = classify_text(classification_tokens);

println!("Text Classification Result: {}", classification_result);

// Task 2: Named Entity Recognition (NER)

let ner_input = "Elon Musk unveiled a new Tesla model in California";

let ner_encoding = tokenizer.encode(ner_input, true)?;

let ner_tokens = ner_encoding.get_ids().to_vec();

let ner_result = recognize_entities(ner_tokens);

println!("Named Entities:");

for (entity, label) in ner_result {

println!("Entity: {}, Label: {}", entity, label);

}

Ok(())

}

The code begins by defining the path to the tokenizer file and downloading it from Hugging Face if necessary, ensuring that the tokenizer is available for further processing. In the classify_text and recognize_entities functions, the tokenized inputs are simulated as producing dummy results—"Positive" for text classification and named entities like "Elon Musk" and "Tesla" with labels for NER. These functions currently ignore the actual tokens, as they are placeholders, but the structure allows easy replacement with model inference functions. The final output demonstrates how the program would display classification and NER results, making it a foundational setup for adding real model-based NLP capabilities.

Open foundational models are highly adaptable and find applications across various industries. In customer service, chatbots utilize foundational models like GPT to engage in conversational tasks, providing interactive, human-like responses to users. In the healthcare sector, BERT-based models enhance information retrieval, enabling medical professionals to quickly find relevant clinical documents. In finance, foundational models are used for sentiment analysis to gauge public opinion and detect trends based on real-time news.

Recent trends in foundational LLMs emphasize optimization techniques, like quantization and model distillation, to make models smaller and more efficient for deployment in real-world environments. Moreover, cross-model techniques integrate LLMs with multimodal inputs, combining text with images or audio to create more comprehensive and versatile AI applications. Rust, through Candle, provides a streamlined, efficient environment to experiment with foundational models and innovations in AI, merging performance with flexibility.

The Candle library in Rust offers a robust platform to explore the inner workings and capabilities of foundational LLMs. Implementing an experimental pipeline such as the ones shown here enables developers to unlock the potential of open foundational models and apply them across a range of domains, creating value in specific industry contexts. Through active engagement with these open-source models, developers not only drive innovation but also contribute to responsible AI practices and sustainable, community-driven development.

10.2. Setting Up Environment for Candle

Setting up a Rust environment tailored to language model (LLM) development enables developers to leverage Rust’s system-level control and memory safety features for machine learning tasks. The Rust ecosystem has evolved to include specialized crates, such as HuggingFace Candle, which facilitate building, training, and deploying LLMs with Rust. HuggingFace Candle is particularly significant as it provides a high-performance library optimized for model inference and experimentation with foundational models. Setting up this environment involves configuring dependencies, managing package versions, and creating a workspace optimized for LLM development. A streamlined environment is essential to navigate Rust’s strict compiler rules and its unique approach to memory and concurrency, both crucial in handling the large data processing demands of machine learning. Proper configuration not only prevents common errors but also maximizes performance during both development and deployment.

Rust’s advantages in LLM development stem from its strong emphasis on memory safety and concurrency. Rust’s ownership model, alongside its compiler-enforced safety checks, minimizes the risk of memory-related issues like buffer overflows, which can be critical when managing large model parameters and data batches. In comparison with languages like Python, Rust provides a safer environment at the cost of a steeper learning curve but offers significant performance advantages. By selecting and integrating the right libraries and tools within Rust’s ecosystem, developers can streamline LLM workflows and build pipelines that achieve optimal efficiency. Rust’s interoperability with other languages, particularly Python, allows developers to integrate with Python’s popular machine learning libraries, such as TensorFlow and PyTorch, while leveraging Rust’s performance in the more computationally demanding portions of LLM development. This hybrid approach combines Python's extensive deep learning resources with Rust’s system-level capabilities, creating an environment well-suited for high-performance, large-scale LLM applications.

The practical setup of Rust for LLMs begins with installing Rust itself and managing dependencies through cargo, Rust’s build and package manager. To get started with HuggingFace Candle, developers install the crate using cargo add candle. For example, running the following command initiates a new Rust project and includes Candle as a dependency:

cargo new llm_project

cd llm_project

cargo add candle

Once Candle is installed, setting up basic examples provides familiarity with both Rust syntax and Candle’s API, which is tailored for loading and interacting with pre-trained models. Below is an advanced example that loads a foundational model and performs inference, providing insights into Rust’s syntax and Candle’s functional flow.

[dependencies]

anyhow = "1.0.90"

candle-core = "0.7.2"

candle-examples = "0.7.2"

candle-nn = "0.7.2"

candle-transformers = "0.7.2"

tokenizers = "0.20.1"

reqwest = { version = "0.11", features = ["blocking"] }

use tokenizers::tokenizer::Tokenizer;

use std::error::Error;

use std::fs;

use std::io::Write;

use std::path::Path;

use reqwest::blocking::get;

fn main() -> std::result::Result<(), Box<dyn Error + Send + Sync>> {

// Define the path to the tokenizer directory and file

let tokenizer_dir = Path::new("models/gpt2-tokenizer");

let tokenizer_path = tokenizer_dir.join("tokenizer.json");

// Step 1: Check if the directory exists; if not, create it.

if !tokenizer_dir.exists() {

fs::create_dir_all(&tokenizer_dir)?;

println!("Directory created at: {:?}", tokenizer_dir);

}

// Step 2: Check if the tokenizer.json file exists; if not, download it.

if !tokenizer_path.exists() {

println!("Downloading tokenizer.json...");

// URL of the tokenizer.json file (GPT-2 tokenizer on Hugging Face)

let url = "https://huggingface.co/gpt2/resolve/main/tokenizer.json";

// Download the file

let response = get(url)?;

if response.status().is_success() {

let mut file = fs::File::create(&tokenizer_path)?;

file.write_all(&response.bytes()?)?;

println!("Downloaded tokenizer.json to {:?}", tokenizer_path);

} else {

println!("Failed to download tokenizer.json. Please check the URL or your internet connection.");

return Ok(());

}

}

// Step 3: Load the tokenizer using the tokenizers crate

let tokenizer = Tokenizer::from_file(&tokenizer_path.to_str().unwrap())?;

// Step 4: Define an input prompt and tokenize it.

let input_text = "Exploring the power of Rust in machine learning.";

let encoding = tokenizer.encode(input_text, true)?;

let input_tokens = encoding.get_ids();

// Step 5: Simulate text generation by creating placeholder output tokens.

// In a real application, these would be generated by a model.

let output_tokens = input_tokens.iter().map(|&id| id + 1).collect::<Vec<_>>(); // Simulated output

// Step 6: Decode the generated tokens back into readable text.

let generated_text = output_tokens

.iter()

.map(|id| tokenizer.id_to_token(*id).unwrap_or("[UNK]".to_string())) // Convert "[UNK]" to String

.collect::<Vec<_>>()

.join(" ");

println!("Generated Text: {}", generated_text);

Ok(())

}

In more detail, the code first checks if the necessary tokenizer files exist and, if not, downloads them to a specified directory. After loading the tokenizer, it tokenizes a sample input sentence into IDs. Since the code does not include an actual language model for text generation, it simulates generated tokens by incrementing each token ID, mimicking the token output that a model might produce. Finally, these simulated tokens are decoded back into text, and the resulting output is printed. This structure allows for easy integration with real model-based generation when available, as it demonstrates tokenization, generation, and decoding steps.

Troubleshooting common setup issues is an important part of configuring a robust Rust environment for machine learning. Given Rust’s strict compiler, errors can often arise from incorrect ownership of data structures or type mismatches, especially when dealing with model parameters and data tokens. Optimizing the environment for performance, particularly in large-scale model training, requires attention to compiler optimizations and possibly leveraging tokio or async-std for asynchronous, multi-threaded data loading. HuggingFace Candle supports several optimization techniques, including CPU acceleration and model quantization, which allow models to run efficiently on limited hardware. Additionally, Rust’s ndarray crate can help handle multidimensional arrays, a common requirement in machine learning. Using Rust’s low-level control over memory layout, large models can be loaded and managed without significant overhead, which is essential for deploying models in resource-constrained environments.

In the industry, this Rust-based setup is increasingly applied in areas that demand both high performance and memory safety, such as finance, healthcare, and autonomous systems. For example, in finance, LLMs built in Rust can perform risk analysis by processing large text data with increased reliability and speed. In healthcare, the precision and safety Rust offers are beneficial in applications requiring accuracy in natural language understanding, such as extracting information from medical literature. By combining these properties, Rust positions itself as a competitive choice in the growing field of LLMs, especially where real-time inference and high levels of robustness are required.

Current trends in Rust for LLMs focus on bridging the gap between high-performance systems programming and the rapidly evolving needs of machine learning. Projects that combine Rust and deep learning frameworks illustrate Rust’s potential for LLM applications in distributed and edge environments. As Rust’s machine learning ecosystem continues to expand, it provides a promising alternative to traditional machine learning development environments, particularly for large-scale and real-time NLP applications. HuggingFace Candle is at the forefront of this shift, enabling developers to leverage powerful LLMs within Rust’s ecosystem, positioning Rust as a significant player in the future of machine learning infrastructure.

10.3. Loading and Using Pre-trained Models

Pre-trained models are a cornerstone of modern NLP, offering vast capabilities without requiring models to be built from scratch. Using the HuggingFace Candle crate in Rust, developers can access, load, and apply various pre-trained language models (LLMs) to a broad range of tasks. This section covers the essentials of loading pre-trained models, from model selection and initialization to leveraging transfer learning for specific tasks. Loading a pre-trained LLM with HuggingFace Candle is both efficient and flexible, enabling access to models like BERT for classification and GPT for generative tasks. This workflow allows developers to bypass extensive training processes, instead leveraging the accumulated linguistic patterns and general knowledge encoded in these models. Candle’s model loading functionalities support this by providing direct access to pre-trained weights, ensuring that the initialization process is both simple and effective.

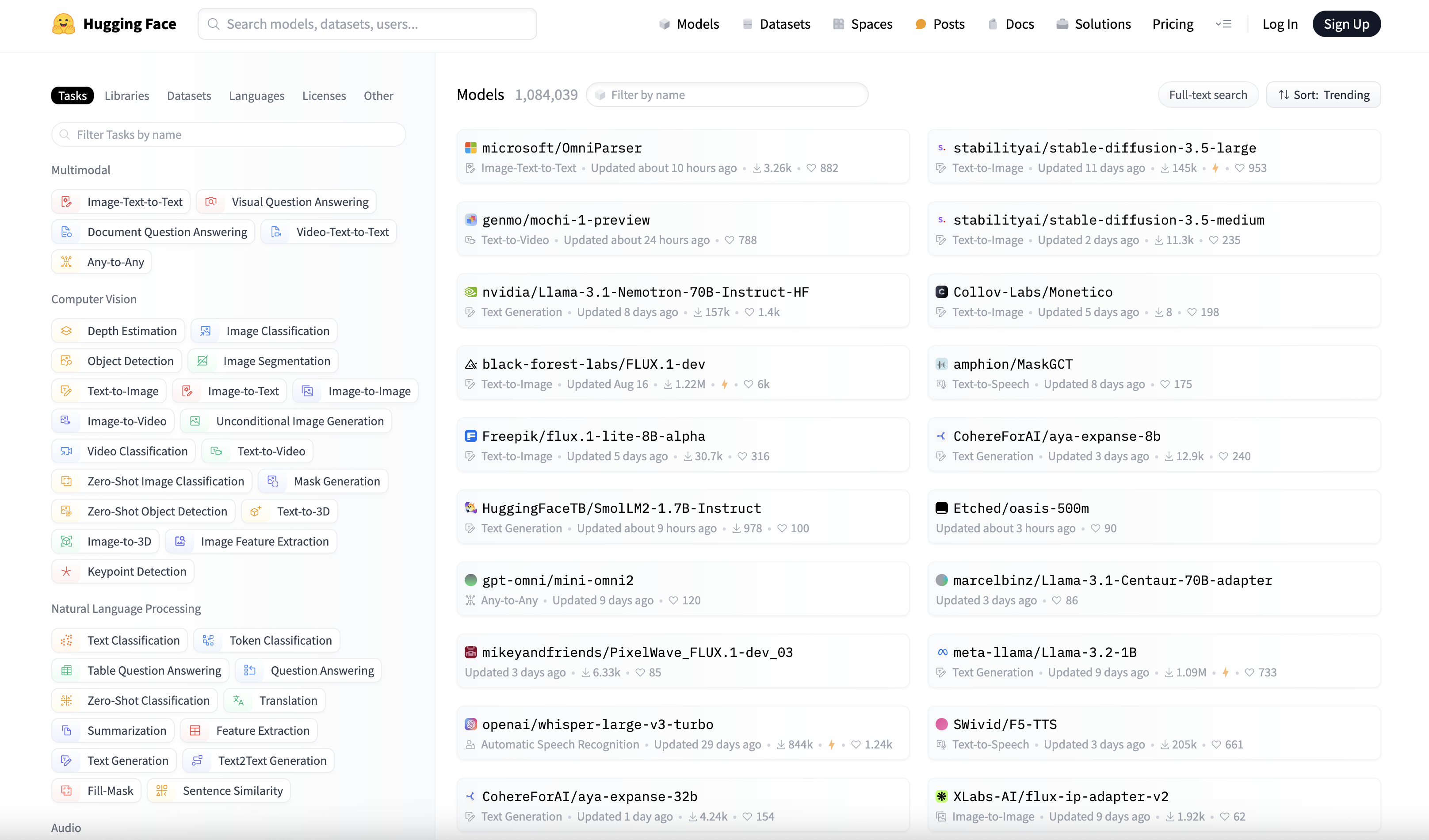

Figure 2: Hugging Face model hub.

NLP tasks often tackled with pre-trained models include text classification, sentiment analysis, and question answering, each harnessing the model's ability to process language comprehensively. For instance, classification tasks can be quickly adapted with BERT by using its pooled output layer, while sentiment analysis benefits from GPT's language comprehension to discern positive or negative sentiment in context. The primary benefit of using pre-trained models is transfer learning, where the knowledge captured in large-scale language models is adapted to new tasks with minimal tuning. This approach is not only cost-effective but also highly efficient, as it relies on previously learned language patterns, which reduces the computational burden. Transfer learning’s benefits are even more pronounced in specialized domains such as finance or healthcare, where domain-specific language use is often nuanced. By applying minimal fine-tuning to a pre-trained model, developers can create powerful NLP tools adapted to the specific vocabulary and style of the target domain.

In choosing between pre-trained and from-scratch models, developers must weigh trade-offs around time, computational cost, and task-specific requirements. While training from scratch provides full control over model behavior, the time and resources required to achieve meaningful results are often prohibitive. Pre-trained models, particularly those with architectures like Transformer-based BERT or GPT, have well-established performance on NLP tasks and are generally versatile enough to adapt with fine-tuning. Each architecture lends itself uniquely to different tasks: BERT’s bidirectional attention mechanism is particularly well-suited for tasks that require deep contextual understanding, whereas GPT’s unidirectional focus is effective for generative tasks. These distinctions help clarify the architectural role in selecting the right model for specific tasks. Pre-trained models, however, have limitations, particularly when it comes to domain adaptation and handling out-of-distribution data. Models trained on general data might struggle to capture domain-specific terminology, requiring additional fine-tuning or domain-adaptive pre-training. Additionally, out-of-distribution data remains a challenge, as models may not generalize well to completely new contexts, leading to degraded performance in unfamiliar language structures or novel vocabulary.

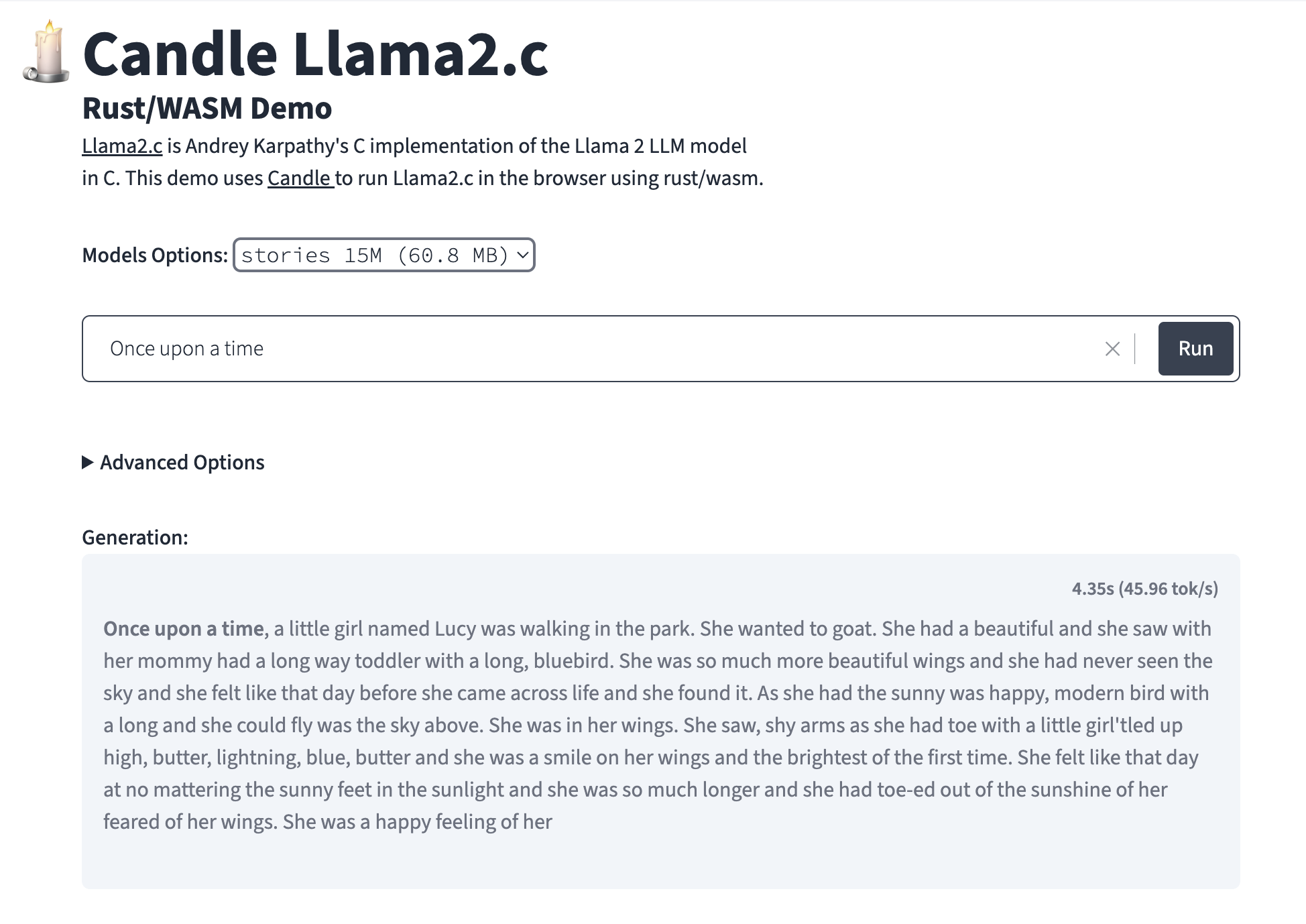

The LLaMA (Large Language Model Meta AI) series, developed by Meta AI, is designed to efficiently perform natural language processing (NLP) tasks. These models, such as the LLaMA-2-7b variant used here, focus on providing high performance with fewer parameters compared to other large language models. Optimized for both effectiveness and scalability, LLaMA models use transformer-based architectures to handle a wide range of NLP applications, from language generation to complex reasoning tasks. Their architecture allows fine-tuning on specialized data, making them adaptable for targeted tasks and efficient in deployment.

Figure 3: LLama 2 Demo at Hugging Face (Ref: https://huggingface.co/spaces/lmz/candle-llama2).

Implementing a basic Rust application that loads a pre-trained model using HuggingFace Candle and performs inference on a sample dataset provides a practical foundation for NLP with Rust. The following code illustrates the initialization of a pre-trained model and its application to a classification task. Here, a BERT-based model is loaded, tokenized input is processed, and inference is performed, demonstrating how Candle’s API facilitates model interaction.

This Rust code demonstrates how to load, configure, and use the LLaMA-2-7b model for text generation. The code first sets up necessary environment variables and downloads model configurations and tokenizer data from the Hugging Face Hub, using an API token for authorization. It initializes a transformer model with specific parameters like sampling temperature and repeat penalties, essential for controlling the generated output's diversity and coherence. The code then encodes a prompt, processes the model's logits (predictions), and outputs generated tokens, iterating through token generation until an end-of-sequence token is encountered or the specified token limit is reached. The result is a structured, token-by-token generation of text based on the initial prompt, demonstrating transformer-based language generation in Rust.

[dependencies]

tokenizers = "0.19.1"

candle-core = "0.7" # Adjust the version if necessary

candle-nn = "0.7"

candle-transformers = "0.7"

hf-hub = "0.3.2"

reqwest = { version = "0.11", features = ["blocking"] }

serde = { version = "1.0", features = ["derive"] }

serde_json = "1.0"

tracing-subscriber = "0.3"

tracing-chrome = "0.6"

anyhow = "1.0.92"

candle-examples = "0.7.2"

use anyhow::{Error as E, Result};

use candle_core::{DType, Tensor};

use candle_nn::VarBuilder;

use candle_transformers::generation::{LogitsProcessor, Sampling};

use hf_hub::{api::sync::Api, Repo, RepoType};

use std::io::Write;

use std::env; // Import env for setting environment variables

use tokenizers::Tokenizer;

use candle_examples::token_output_stream::TokenOutputStream;

use tracing_chrome::ChromeLayerBuilder;

use tracing_subscriber::prelude::*;

use candle_transformers::models::llama as model;

use model::{Llama, LlamaConfig};

const EOS_TOKEN: &str = "</s>";

const DEFAULT_PROMPT: &str = "My favorite theorem is ";

fn main() -> Result<()> {

// Set the Hugging Face API token

env::set_var("HUGGINGFACE_HUB_TOKEN", "your_huggingface_token_here");

let _guard = {

let (chrome_layer, guard) = ChromeLayerBuilder::new().build();

tracing_subscriber::registry().with(chrome_layer).init();

Some(guard)

};

// Hardcoded parameters

let cpu = true;

let temperature = 0.8;

let top_p = None;

let top_k = None;

let seed = 299792458;

let sample_len = 10000;

let no_kv_cache = false;

let prompt = Some(DEFAULT_PROMPT.to_string());

let dtype = DType::F16;

let model_id = "meta-llama/Llama-2-7b-hf".to_string();

let revision = "main".to_string();

let use_flash_attn = false;

let repeat_penalty = 1.1;

let repeat_last_n = 128;

let device = candle_examples::device(cpu)?;

let api = Api::new()?;

let api = api.repo(Repo::with_revision(model_id, RepoType::Model, revision));

// Download tokenizer

let tokenizer_url = "https://huggingface.co/hf-internal-testing/llama-tokenizer/raw/main/tokenizer.json";

let tokenizer_filename = "tokenizer.json";

std::fs::write(tokenizer_filename, reqwest::blocking::get(tokenizer_url)?.text()?)?;

let config_filename = api.get("config.json")?;

let config: LlamaConfig = serde_json::from_slice(&std::fs::read(config_filename)?)?;

let config = config.into_config(use_flash_attn);

let filenames = vec![api.get("model.safetensors")?];

let cache = model::Cache::new(!no_kv_cache, dtype, &config, &device)?;

let vb = unsafe { VarBuilder::from_mmaped_safetensors(&filenames, dtype, &device)? };

let (llama, tokenizer_filename, mut cache, _config) = (Llama::load(vb, &config)?, tokenizer_filename, cache, config);

// Load the tokenizer using tokenizers::Tokenizer

let tokenizer = Tokenizer::from_file(tokenizer_filename).map_err(E::msg)?;

// Get the EOS token ID

let eos_token_id = tokenizer.token_to_id(EOS_TOKEN).map(model::LlamaEosToks::Single);

// Encode the prompt

let prompt = prompt.as_ref().map_or(DEFAULT_PROMPT, |p| p.as_str());

let mut tokens = tokenizer.encode(prompt, true).map_err(E::msg)?.get_ids().to_vec();

// Initialize TokenOutputStream with the tokenizer

let mut token_output_stream = TokenOutputStream::new(tokenizer);

println!("starting the inference loop");

print!("{prompt}");

let mut logits_processor = {

let sampling = if temperature <= 0. {

Sampling::ArgMax

} else {

match (top_k, top_p) {

(None, None) => Sampling::All { temperature },

(Some(k), None) => Sampling::TopK { k, temperature },

(None, Some(p)) => Sampling::TopP { p, temperature },

(Some(k), Some(p)) => Sampling::TopKThenTopP { k, p, temperature },

}

};

LogitsProcessor::from_sampling(seed, sampling)

};

let mut start_gen = std::time::Instant::now();

let mut index_pos = 0;

let mut token_generated = 0;

for index in 0..sample_len {

let (context_size, context_index) = if cache.use_kv_cache && index > 0 {

(1, index_pos)

} else {

(tokens.len(), 0)

};

if index == 1 {

start_gen = std::time::Instant::now()

}

let ctxt = &tokens[tokens.len().saturating_sub(context_size)..];

let input = Tensor::new(ctxt, &device)?.unsqueeze(0)?;

let logits = llama.forward(&input, context_index, &mut cache)?;

let logits = logits.squeeze(0)?;

let logits = if repeat_penalty == 1. {

logits

} else {

let start_at = tokens.len().saturating_sub(repeat_last_n);

candle_transformers::utils::apply_repeat_penalty(

&logits,

repeat_penalty,

&tokens[start_at..],

)?

};

index_pos += ctxt.len();

let next_token = logits_processor.sample(&logits)?;

token_generated += 1;

tokens.push(next_token);

match eos_token_id {

Some(model::LlamaEosToks::Single(eos_tok_id)) if next_token == eos_tok_id => {

break;

}

Some(model::LlamaEosToks::Multiple(ref eos_ids)) if eos_ids.contains(&next_token) => {

break;

}

_ => (),

}

if let Some(t) = token_output_stream.next_token(next_token)? {

print!("{t}");

std::io::stdout().flush()?;

}

}

if let Some(rest) = token_output_stream.decode_rest().map_err(E::msg)? {

print!("{rest}");

}

let dt = start_gen.elapsed();

println!(

"\n\n{} tokens generated ({} token/s)\n",

token_generated,

(token_generated - 1) as f64 / dt.as_secs_f64(),

);

Ok(())

}

The Phi model is a family of language models developed to perform a variety of natural language processing (NLP) tasks with improved efficiency and precision. Phi models are designed with a transformer-based architecture, known for its robust handling of language understanding and generation tasks. They aim to balance model size and computational efficiency, allowing for quicker inference and lower energy consumption without compromising on accuracy. This balance makes Phi models versatile for both large-scale deployments and edge computing, where resource constraints are often a factor.

Phi models incorporate techniques such as layer normalization and attention mechanisms to capture complex language patterns, and they are often fine-tuned on diverse datasets to improve generalization across different types of text, from formal documents to conversational language. The architecture typically uses self-attention mechanisms to weigh the importance of various words or phrases in a sequence, allowing the model to build contextual representations that are critical for coherent language generation. With multiple configurations, Phi models cater to different computational needs and application scopes, making them adaptable to specific language processing challenges.

use anyhow::{Error as E, Result};

use candle_core::{DType, Tensor};

use candle_nn::VarBuilder;

use candle_transformers::generation::LogitsProcessor;

use hf_hub::{api::sync::Api, Repo, RepoType};

use std::env;

use std::io::Write; // Import `Write` trait to use `flush()`

use tokenizers::Tokenizer;

use candle_examples::token_output_stream::TokenOutputStream;

use tracing_chrome::ChromeLayerBuilder;

use tracing_subscriber::prelude::*;

use candle_transformers::models::phi::{Config as PhiConfig, Model as Phi};

const EOS_TOKEN: &str = "</s>";

const DEFAULT_PROMPT: &str = "My favorite theorem is ";

const HUGGINGFACE_HUB_TOKEN: &str = "your_huggingface_token_here";

fn main() -> Result<()> {

// Set the Hugging Face API token

env::set_var("HUGGINGFACE_HUB_TOKEN", HUGGINGFACE_HUB_TOKEN);

let _guard = {

let (chrome_layer, guard) = ChromeLayerBuilder::new().build();

tracing_subscriber::registry().with(chrome_layer).init();

Some(guard)

};

// Hardcoded parameters

let cpu = true;

let temperature = 0.8;

let top_p = None;

let seed = 299792458;

let sample_len = 5000;

let repeat_penalty = 1.1;

let repeat_last_n = 128;

let device = candle_examples::device(cpu)?;

let api = Api::new()?;

let api = api.repo(Repo::with_revision("microsoft/phi-2".to_string(), RepoType::Model, "main".to_string()));

// Download tokenizer

let tokenizer_filename = api.get("tokenizer.json")?;

let tokenizer = Tokenizer::from_file(tokenizer_filename).map_err(E::msg)?;

let config_filename = api.get("config.json")?;

let config: PhiConfig = serde_json::from_slice(&std::fs::read(config_filename)?)?;

let vb = unsafe { VarBuilder::from_mmaped_safetensors(&[api.get("model.safetensors")?], DType::F16, &device)? };

let mut model = Phi::new(&config, vb)?; // Make `model` mutable

// Initialize TokenOutputStream with the tokenizer

let mut token_output_stream = TokenOutputStream::new(tokenizer);

println!("starting the inference loop");

print!("{DEFAULT_PROMPT}");

let mut logits_processor = LogitsProcessor::new(seed, Some(temperature), top_p);

let prompt_text = DEFAULT_PROMPT.to_string();

let mut tokens = token_output_stream

.tokenizer()

.encode(&*prompt_text, true) // Dereference `prompt_text` to match expected type

.map_err(E::msg)?

.get_ids()

.to_vec();

let start_gen = std::time::Instant::now(); // Removed `mut` here as it is not needed

let mut generated_tokens = 0;

for index in 0..sample_len {

let context_size = if index > 0 { 1 } else { tokens.len() };

let ctxt = &tokens[tokens.len().saturating_sub(context_size)..];

let input = Tensor::new(ctxt, &device)?.unsqueeze(0)?;

let logits = model.forward(&input)?.squeeze(0)?;

let logits = logits.to_dtype(DType::F32)?;

let logits = if repeat_penalty == 1. {

logits

} else {

let start_at = tokens.len().saturating_sub(repeat_last_n);

candle_transformers::utils::apply_repeat_penalty(&logits, repeat_penalty, &tokens[start_at..])?

};

let next_token = logits_processor.sample(&logits)?;

tokens.push(next_token);

generated_tokens += 1;

if next_token == token_output_stream.tokenizer().token_to_id(EOS_TOKEN).ok_or(E::msg("EOS token not found"))? {

break;

}

if let Some(t) = token_output_stream.next_token(next_token)? {

print!("{t}");

std::io::stdout().flush()?; // Use `flush` to immediately display the output

}

}

let dt = start_gen.elapsed();

println!(

"\n\n{} tokens generated ({} token/s)\n",

generated_tokens,

(generated_tokens as f64 / dt.as_secs_f64())

);

Ok(())

}

In the provided code, the Phi model is instantiated for text generation tasks. The code begins by setting an API token to access Hugging Face’s model repository, followed by downloading necessary model and tokenizer files. After initializing the Phi model with specific configurations (like setting temperature for sample diversity and repeat_penalty for controlling repetition), the code processes an input prompt. Using a tokenizer, the input prompt is encoded into tokens, which the model then processes iteratively, generating one token at a time based on the logits (predictions) from previous tokens. This token generation continues until reaching the end-of-sequence token, providing a fluent and contextually coherent output based on the prompt, which is printed to the console as it generates.

Fine-tuning a pre-trained model in Rust with Candle introduces flexibility to adapt to a specific domain. The following code illustrates the fine-tuning process, which adjusts a model’s weights based on a custom dataset, making it responsive to unique language patterns. Fine-tuning is particularly beneficial in specialized domains like medical or legal text, where general models may lack the nuanced understanding required.

In this code, a BERT model is fine-tuned on a custom dataset. The Trainer instance is initialized with the model and dataset, and training proceeds over multiple epochs, adjusting model weights based on domain-specific data. Fine-tuning allows the model to retain foundational knowledge while adapting to new terminology and language patterns, making it more effective for specific tasks. This approach can significantly improve performance on domain-adaptive tasks, providing a higher degree of accuracy compared to using the model in its pre-trained state.

In industry, using pre-trained models has become a standard approach for efficient, scalable NLP applications. In financial analysis, models can be fine-tuned on datasets containing financial terminology, enabling them to process sentiment in market reports. Similarly, in the healthcare sector, models fine-tuned on medical literature offer enhanced comprehension of clinical texts. Recent trends focus on reducing model size and computational requirements through quantization and distillation techniques, making LLMs more practical for deployment in production environments. Additionally, domain-specific pre-trained models, such as BioBERT for biomedical data, have shown significant performance improvements over general models.

Rust’s high-performance capabilities, combined with HuggingFace Candle’s flexible interface for model loading and fine-tuning, create a robust environment for developing NLP applications. Through pre-trained models, developers can harness state-of-the-art language understanding with minimal setup, while Rust’s memory safety ensures efficient use of computational resources. This approach not only enhances model performance but also opens up opportunities to apply LLMs across various industries, paving the way for a new generation of high-efficiency, domain-adaptable NLP tools.

10.4. Fine-Tuning Open Foundational LLMs

Fine-tuning foundational models is crucial for adapting large language models to specific tasks or domains, enabling them to address specialized applications that general pre-trained models might not fully capture. Foundational models like GPT and BERT excel at capturing general language patterns but often require additional refinement to perform effectively in specific contexts, such as legal document analysis, financial sentiment prediction, or medical terminology processing. Fine-tuning leverages pre-existing knowledge in the foundational model by adjusting its parameters on task-specific data, retaining the advantages of transfer learning while customizing the model's output for targeted tasks. This adaptability makes fine-tuning a valuable technique, particularly in settings where linguistic nuances and domain-specific vocabulary impact the quality of predictions.

Several strategies exist for fine-tuning large-scale models. Supervised fine-tuning adjusts model parameters based on labeled data, which ensures that the model learns from clear examples relevant to the target task. Few-shot learning, on the other hand, involves training the model with a small number of task-specific examples, a valuable approach for resource-constrained scenarios. Domain-specific pre-training offers another effective strategy, where the model undergoes further pre-training on large datasets specific to a domain, such as legal or scientific texts, to capture specialized language structures before task-specific fine-tuning. Each strategy presents unique challenges, such as avoiding overfitting on smaller datasets or managing the computational resources required for large models. Overfitting can result in a model that performs well on training data but struggles with generalization, necessitating techniques like dropout regularization and early stopping to balance model complexity and robustness.

Data quality and diversity are essential in the fine-tuning process. The effectiveness of fine-tuning depends on training data that accurately reflects the language patterns of the target domain, ensuring the model learns generalizable patterns rather than memorizing specific examples. Hyperparameter tuning is another critical factor, as parameters such as learning rate, batch size, and regularization coefficients directly influence the fine-tuning process's success. For instance, a lower learning rate can prevent drastic parameter changes, preserving the foundational knowledge in the pre-trained model while allowing gradual adaptation to new data. Balancing generalization and specialization also poses trade-offs, as models fine-tuned on niche domains may perform exceptionally well on in-domain tasks but lack robustness in broader contexts. These trade-offs should be carefully considered based on the intended application.

In practice, setting up a fine-tuning pipeline in Rust with HuggingFace Candle provides a robust approach to adapting foundational models for specific tasks. The following example demonstrates fine-tuning a BERT model for sentiment analysis, implementing a custom data loader, model trainer, and evaluation framework. This pipeline includes loading the model, preparing data, adjusting hyperparameters, and executing the fine-tuning loop with regular evaluations to track model improvement.

This Rust code implements a simple sentiment analysis framework using the tch crate, which provides bindings to PyTorch. It defines a data loader for sentiment analysis data, a basic training and evaluation setup, and a neural network model. Sentiment labels are represented as an enumeration (Sentiment), which distinguishes between positive and negative sentiments. The model processes batches of data using a DataLoader struct, and a trainer struct (Trainer) facilitates the training and evaluation processes. The code uses randomly generated embeddings as placeholders for tokenized text, allowing it to simulate input data for training and testing.

[dependencies]

serde = "1.0.214"

tch = "0.12.0"

use tch::{nn, nn::OptimizerConfig, Device, Tensor, Kind};

use serde::{Serialize, Deserialize};

use std::error::Error;

// Define sentiment analysis labels

#[derive(Debug, Serialize, Deserialize, Clone)]

enum Sentiment {

Positive,

Negative,

}

// Define a custom data loader for loading sentiment analysis data

struct DataLoader {

data: Vec<(String, Sentiment)>,

batch_size: usize,

}

impl DataLoader {

fn new(data: Vec<(String, Sentiment)>, batch_size: usize) -> Self {

DataLoader { data, batch_size }

}

fn get_batch(&self, index: usize) -> Vec<(String, Sentiment)> {

let start = index * self.batch_size;

let end = std::cmp::min(start + self.batch_size, self.data.len());

self.data[start..end].to_vec()

}

fn total_batches(&self) -> usize {

(self.data.len() + self.batch_size - 1) / self.batch_size

}

}

// Placeholder for tokenization and encoding logic

fn preprocess_data(data: Vec<(String, Sentiment)>) -> Vec<(Tensor, Tensor)> {

data.iter()

.map(|(_text, sentiment)| {

// Generate a tensor with 128 features as a placeholder for tokenized text

let tokens = Tensor::randn([128], (Kind::Float, Device::Cpu)); // Adjusted to match model's input size

let label = match sentiment {

Sentiment::Positive => 1,

Sentiment::Negative => 0,

};

// Set label to Kind::Int64 to match expected type in cross-entropy

(tokens, Tensor::from(label).to_kind(Kind::Int64))

})

.collect()

}

// Define the model trainer

struct Trainer {

model: Box<dyn nn::ModuleT>,

optimizer: nn::Optimizer,

}

impl Trainer {

fn new(vs: nn::VarStore, model: Box<dyn nn::ModuleT>) -> Self {

let optimizer = nn::Adam::default().build(&vs, 1e-4).unwrap();

Trainer { model, optimizer }

}

fn train(&mut self, data_loader: &DataLoader, epochs: usize) {

for epoch in 0..epochs {

println!("Epoch: {}", epoch + 1);

for batch_idx in 0..data_loader.total_batches() {

let batch = data_loader.get_batch(batch_idx);

let (inputs, labels): (Vec<Tensor>, Vec<Tensor>) = preprocess_data(batch).into_iter().unzip();

let input_tensor = Tensor::stack(&inputs, 0);

let label_tensor = Tensor::stack(&labels, 0);

// Calculate loss without `no_grad`

let logits = self.model.forward_t(&input_tensor, true);

let loss = logits.cross_entropy_for_logits(&label_tensor);

// Backpropagation

self.optimizer.backward_step(&loss);

println!("Batch: {}, Loss: {:?}", batch_idx + 1, loss);

}

}

}

fn evaluate(&self, data_loader: &DataLoader) -> f64 {

let mut correct = 0;

let mut total = 0;

for batch_idx in 0..data_loader.total_batches() {

let batch = data_loader.get_batch(batch_idx);

let (inputs, labels): (Vec<Tensor>, Vec<Tensor>) = preprocess_data(batch).into_iter().unzip();

let input_tensor = Tensor::stack(&inputs, 0);

let label_tensor = Tensor::stack(&labels, 0);

let output = self.model.forward_t(&input_tensor, false);

let preds = output.argmax(1, true);

let batch_correct = preds.eq_tensor(&label_tensor).sum(Kind::Int64).int64_value(&[]);

correct += batch_correct;

total += label_tensor.size()[0];

}

correct as f64 / total as f64

}

}

fn main() -> Result<(), Box<dyn Error>> {

let device = Device::cuda_if_available();

let vs = nn::VarStore::new(device);

// Placeholder for model initialization

let model = nn::seq().add(nn::linear(vs.root() / "layer1", 128, 2, Default::default())); // Replace with actual model

let data = vec![

("I love this!".to_string(), Sentiment::Positive),

("This is bad.".to_string(), Sentiment::Negative),

];

let data_loader = DataLoader::new(data, 2);

// Box the model here

let mut trainer = Trainer::new(vs, Box::new(model));

// Train and evaluate the model

trainer.train(&data_loader, 5);

let accuracy = trainer.evaluate(&data_loader);

println!("Final Accuracy: {:.2}%", accuracy * 100.0);

Ok(())

}

The code operates by first initializing a model with a single linear layer, a data loader, and a trainer. During training, the Trainer iterates through the dataset in batches, computes the model’s predictions, calculates the cross-entropy loss, and updates the model parameters using backpropagation with the Adam optimizer. The evaluate function computes the model’s accuracy by comparing predictions with actual labels across all batches. In main, the program runs five epochs of training on the sample data and outputs the final accuracy, demonstrating the model's ability to learn sentiment distinctions.

Lets see other Rust code to demonstrate fine-tuning a GPT-2 model using the tch crate, a Rust binding for PyTorch. The code prepares a structure for fine-tuning GPT-2 on a text completion task by defining a data loader, data preprocessing steps, and a trainer module. It uses a simple DataLoader to handle batches of text data and a Trainer struct that manages model training and evaluation. For simplicity, the code uses randomly generated embeddings to represent tokenized text, simulating inputs that would typically be passed to a language model.

use tch::{nn, nn::OptimizerConfig, Device, Tensor, Kind};

use std::error::Error;

// Define a custom data loader for loading text completion data

struct DataLoader {

data: Vec<String>, // Input text data for fine-tuning

batch_size: usize,

}

impl DataLoader {

fn new(data: Vec<String>, batch_size: usize) -> Self {

DataLoader { data, batch_size }

}

fn get_batch(&self, index: usize) -> Vec<String> {

let start = index * self.batch_size;

let end = std::cmp::min(start + self.batch_size, self.data.len());

self.data[start..end].to_vec()

}

fn total_batches(&self) -> usize {

(self.data.len() + self.batch_size - 1) / self.batch_size

}

}

// Placeholder for tokenization and encoding logic

fn preprocess_data(data: Vec<String>) -> Vec<Tensor> {

data.iter()

.map(|_text| {

// Generate a tensor with 768 features as a placeholder for GPT-2 tokenized text

Tensor::randn([768], (Kind::Float, Device::Cpu)) // Adjusted to GPT-2's input size

})

.collect()

}

// Define the model trainer

struct Trainer {

model: Box<dyn nn::ModuleT>,

optimizer: nn::Optimizer,

}

impl Trainer {

fn new(vs: nn::VarStore, model: Box<dyn nn::ModuleT>) -> Self {

let optimizer = nn::Adam::default().build(&vs, 1e-4).unwrap();

Trainer { model, optimizer }

}

fn train(&mut self, data_loader: &DataLoader, epochs: usize) {

for epoch in 0..epochs {

println!("Epoch: {}", epoch + 1);

for batch_idx in 0..data_loader.total_batches() {

let batch = data_loader.get_batch(batch_idx);

let inputs: Vec<Tensor> = preprocess_data(batch);

let input_tensor = Tensor::stack(&inputs, 0);

// Forward pass through GPT-2

let logits = self.model.forward_t(&input_tensor, true);

// Dummy target for cross-entropy (randomly generated labels)

let target = Tensor::randint(50257, &[inputs.len() as i64], (Kind::Int64, Device::Cpu));

// Calculate cross-entropy loss between generated and target

let loss = logits.cross_entropy_for_logits(&target);

// Backpropagation

self.optimizer.backward_step(&loss);

println!("Batch: {}, Loss: {:?}", batch_idx + 1, loss);

}

}

}

fn evaluate(&self, data_loader: &DataLoader) -> f64 {

let mut total_loss = 0.0;

let mut batch_count = 0;

for batch_idx in 0..data_loader.total_batches() {

let batch = data_loader.get_batch(batch_idx);

let inputs: Vec<Tensor> = preprocess_data(batch);

let input_tensor = Tensor::stack(&inputs, 0);

let logits = self.model.forward_t(&input_tensor, false);

// Dummy target for evaluation

let target = Tensor::randint(50257, &[inputs.len() as i64], (Kind::Int64, Device::Cpu));

let loss = logits.cross_entropy_for_logits(&target);

total_loss += loss.double_value(&[]);

batch_count += 1;

}

total_loss / batch_count as f64

}

}

fn main() -> Result<(), Box<dyn Error>> {

let device = Device::cuda_if_available();

let vs = nn::VarStore::new(device);

// Placeholder for GPT-2 model initialization (replace with actual GPT-2 model)

let model = nn::seq().add(nn::linear(vs.root() / "layer1", 768, 50257, Default::default())); // Replace with actual model

// Sample data

let data = vec![

"The quick brown fox".to_string(),

"GPT-2 is an advanced language model".to_string(),

];

let data_loader = DataLoader::new(data, 2);

// Box the model here

let mut trainer = Trainer::new(vs, Box::new(model));

// Train and evaluate the model

trainer.train(&data_loader, 3);

let average_loss = trainer.evaluate(&data_loader);

println!("Average Loss: {:.2}", average_loss);

Ok(())

}

The program operates by initializing a GPT-2-like model with a placeholder single linear layer and training it over several epochs. During training, the Trainer struct iterates through data batches, calculates the model's output, and computes cross-entropy loss against randomly generated target tokens as placeholders. The optimizer updates the model weights to minimize the loss, simulating a fine-tuning process. After training, the evaluate method computes the average loss across the dataset, giving an indication of the model's performance. This code provides a structural outline for fine-tuning a language model in Rust.

Below is the Rust code to demonstrate a structure for fine-tuning a LLaMA 2-like language model using the tch crate, a Rust wrapper for PyTorch. It is designed to simulate text completion by setting up a data loader, a preprocessing function for input text, and a training and evaluation pipeline. The DataLoader manages batches of text data, while a simple linear layer simulates the LLaMA 2 model's output layer. The code uses randomly generated embeddings as placeholders for tokenized text, preparing the model to handle text data in batches.

use tch::{nn, nn::OptimizerConfig, Device, Tensor, Kind};

use std::error::Error;

// Define a custom data loader for loading text completion data

struct DataLoader {

data: Vec<String>, // Input text data for fine-tuning

batch_size: usize,

}

impl DataLoader {

fn new(data: Vec<String>, batch_size: usize) -> Self {

DataLoader { data, batch_size }

}

fn get_batch(&self, index: usize) -> Vec<String> {

let start = index * self.batch_size;

let end = std::cmp::min(start + self.batch_size, self.data.len());

self.data[start..end].to_vec()

}

fn total_batches(&self) -> usize {

(self.data.len() + self.batch_size - 1) / self.batch_size

}

}

// Placeholder for tokenization and encoding logic

fn preprocess_data(data: Vec<String>) -> Vec<Tensor> {

data.iter()

.map(|_text| {

// Generate a tensor with 1024 features as a placeholder for LLaMA 2 tokenized text

Tensor::randn([1024], (Kind::Float, Device::Cpu)) // Adjusted to LLaMA 2's input size

})

.collect()

}

// Define the model trainer

struct Trainer {

model: Box<dyn nn::ModuleT>,

optimizer: nn::Optimizer,

}

impl Trainer {

fn new(vs: nn::VarStore, model: Box<dyn nn::ModuleT>) -> Self {

let optimizer = nn::Adam::default().build(&vs, 1e-4).unwrap();

Trainer { model, optimizer }

}

fn train(&mut self, data_loader: &DataLoader, epochs: usize) {

for epoch in 0..epochs {

println!("Epoch: {}", epoch + 1);

for batch_idx in 0..data_loader.total_batches() {

let batch = data_loader.get_batch(batch_idx);

let inputs: Vec<Tensor> = preprocess_data(batch);

let input_tensor = Tensor::stack(&inputs, 0);

// Forward pass through LLaMA 2

let logits = self.model.forward_t(&input_tensor, true);

// Dummy target for cross-entropy (randomly generated labels)

let target = Tensor::randint(32000, &[inputs.len() as i64], (Kind::Int64, Device::Cpu));

// Calculate cross-entropy loss between generated and target

let loss = logits.cross_entropy_for_logits(&target);

// Backpropagation

self.optimizer.backward_step(&loss);

println!("Batch: {}, Loss: {:?}", batch_idx + 1, loss);

}

}

}

fn evaluate(&self, data_loader: &DataLoader) -> f64 {

let mut total_loss = 0.0;

let mut batch_count = 0;

for batch_idx in 0..data_loader.total_batches() {

let batch = data_loader.get_batch(batch_idx);

let inputs: Vec<Tensor> = preprocess_data(batch);

let input_tensor = Tensor::stack(&inputs, 0);

let logits = self.model.forward_t(&input_tensor, false);

// Dummy target for evaluation

let target = Tensor::randint(32000, &[inputs.len() as i64], (Kind::Int64, Device::Cpu));

let loss = logits.cross_entropy_for_logits(&target);

total_loss += loss.double_value(&[]);

batch_count += 1;

}

total_loss / batch_count as f64

}

}

fn main() -> Result<(), Box<dyn Error>> {

let device = Device::cuda_if_available();

let vs = nn::VarStore::new(device);

// Placeholder for LLaMA 2 model initialization (replace with actual LLaMA 2 model)

let model = nn::seq().add(nn::linear(vs.root() / "layer1", 1024, 32000, Default::default())); // Replace with actual model

// Sample data

let data = vec![

"The quick brown fox".to_string(),

"LLaMA 2 is an advanced language model".to_string(),

];

let data_loader = DataLoader::new(data, 2);

// Box the model here

let mut trainer = Trainer::new(vs, Box::new(model));

// Train and evaluate the model

trainer.train(&data_loader, 3);

let average_loss = trainer.evaluate(&data_loader);

println!("Average Loss: {:.2}", average_loss);

Ok(())

}

The code operates by first initializing a simulated model and optimizer, then iterating through data batches to calculate predictions and compute cross-entropy loss against randomly generated targets. During training, the Trainer struct handles backpropagation, adjusting model weights based on the calculated loss. After each epoch, the evaluate function computes the average loss, providing a measure of the model's performance on the training data. This outline demonstrates the process of fine-tuning a language model in Rust and provides a structural template for integrating real pre-trained models and tokenization in future implementations.

Experimenting with different fine-tuning strategies and hyperparameter configurations can yield significant insights into model performance. For instance, adjusting batch size can impact memory use and convergence rates, while varying the learning rate can influence the model’s adaptability. To better understand the model’s versatility, we evaluate its performance on both in-domain (sentiment analysis) and out-of-domain (general text) datasets. Evaluating the fine-tuned model on out-of-domain data provides insight into its generalization capabilities, revealing if the model has become overly specialized.

Fine-tuning has found extensive use across industries. In finance, sentiment analysis models fine-tuned on industry-specific language can aid in interpreting investor sentiment from financial news and social media posts. In healthcare, foundational models fine-tuned on clinical text corpora have significantly improved information retrieval and classification accuracy, particularly in medical literature analysis. Recent trends in fine-tuning emphasize resource efficiency and cross-domain adaptability. For example, parameter-efficient fine-tuning techniques, such as adapters and LoRA (Low-Rank Adaptation), enable models to be fine-tuned with a smaller set of parameters, reducing computational demands while maintaining performance. Additionally, mixed-domain fine-tuning trains models across multiple related domains to improve generalization, creating robust models capable of performing consistently across a wide range of contexts.

Using Rust with HuggingFace Candle to fine-tune LLMs offers a high-performance, memory-efficient approach to specialized NLP model training. Rust’s memory safety guarantees, combined with Candle’s flexible model-loading capabilities, provide developers with a secure, efficient platform for fine-tuning, reducing the risk of memory-related issues common in large-scale model training. By implementing a fine-tuning pipeline as illustrated, developers can adapt foundational LLMs to meet the specific requirements of diverse domains, creating highly customized, powerful NLP tools for industry applications. Through the exploration of various fine-tuning strategies and performance optimizations, Rust provides a solid foundation for deploying fine-tuned LLMs, enabling scalable, efficient NLP solutions across a range of industries.

10.5. Deploying LLMs Using Rust and Candle

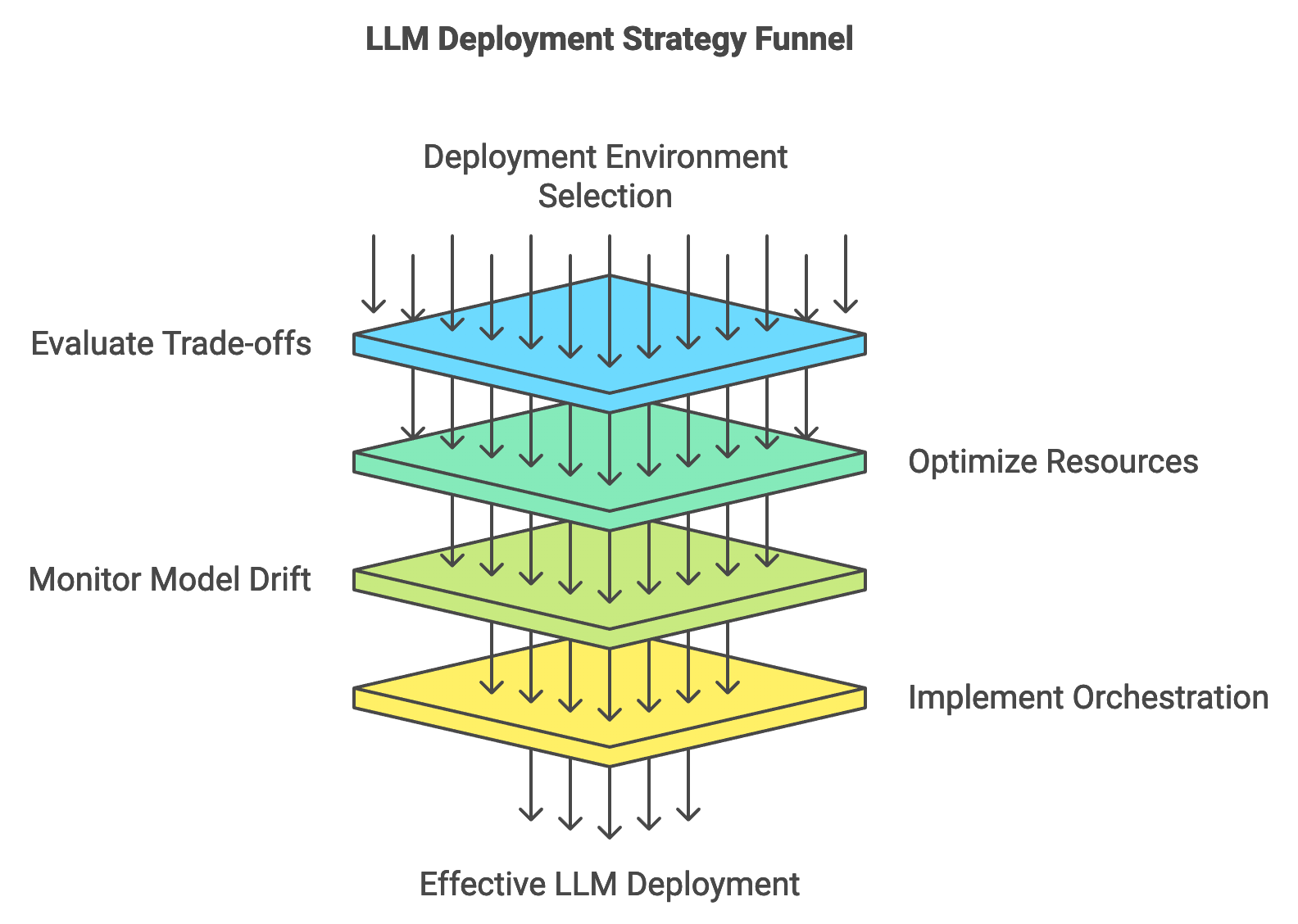

Deploying large language models (LLMs) into production environments presents a unique set of challenges, requiring careful planning around scalability, latency, and resource efficiency. The deployment of LLMs often entails balancing computational demands with performance requirements, particularly in environments with limited resources or strict latency constraints. A successful deployment strategy for LLMs involves selecting the appropriate deployment environment—whether on-premises, cloud-based, or edge—each offering different trade-offs between scalability, control, and cost. On-premises deployments provide data privacy and control, whereas cloud-based solutions offer scalability and reduced maintenance overhead. Edge deployments are ideal for low-latency applications where data processing must occur close to the user, minimizing the lag caused by data transfer to remote servers. In each case, resource optimization and deployment efficiency are crucial to ensuring the model performs consistently and reliably under real-world conditions.

Figure 4: Process of deploying LLM.

Monitoring and maintaining deployed LLMs is essential, as model drift—a shift in model performance over time due to changing data distributions—can impact accuracy. Regular updates and retraining with new data can mitigate model drift, ensuring that the model remains relevant and performs accurately in production. The use of orchestration tools like Docker and Kubernetes is particularly advantageous in LLM deployment, as these tools facilitate containerization, scaling, and lifecycle management of deployed models. Containers enable consistent environments across different systems, simplifying deployment and maintenance. Kubernetes, in particular, automates load balancing, monitoring, and scaling, making it suitable for high-demand applications that rely on LLMs. By leveraging these tools, developers can ensure the efficient handling of resources, model stability, and performance consistency in production environments.

Each deployment environment comes with inherent trade-offs. Edge deployments, while advantageous for low-latency applications, may lack the computational power of cloud-based environments. Cloud-based deployments, on the other hand, are ideal for scaling as they provide access to flexible computing resources, which can be adapted to accommodate surges in demand. However, cloud environments can incur higher operational costs, and data transmission latency might hinder real-time processing requirements. When choosing a deployment environment, factors such as cost, model performance, and user experience must be carefully evaluated to align with the application’s specific requirements. For instance, deploying an LLM for interactive customer service might prioritize low latency and user experience, favoring edge deployment, whereas an LLM used in a data-intensive backend analysis might benefit more from cloud scalability and resource availability.

Implementing a Rust-based deployment pipeline for an LLM using HuggingFace Candle enables a performant, low-latency solution optimized for model serving. The example below demonstrates a REST API setup for model inference, where the model is hosted as a service, allowing external applications to send requests for predictions. Rust’s efficiency and low-level control allow for fast response times, which are especially beneficial in production deployments. This code is a Rust-based REST API for a BERT model, allowing text predictions through a /predict endpoint. The API, built with Rocket, utilizes the candle library for model inference and tokenizers for text tokenization. It downloads the model and tokenizer from Hugging Face’s Hub, processes input text, runs it through the model, and returns a decoded response.

[dependencies]

tokenizers = "0.19.1"

candle-core = "0.7" # Adjust the version if necessary

candle-nn = "0.7"

candle-transformers = "0.7"

hf-hub = "0.3.2"

reqwest = { version = "0.11", features = ["blocking"] }

serde = { version = "1.0", features = ["derive"] }

serde_json = "1.0"

tracing-subscriber = "0.3"

tracing-chrome = "0.6"

anyhow = "1.0.92"

candle-examples = "0.7.2"

tokio = { version = "1", features = ["full"] }

rocket = { version = "0.5.1", features = ["json"] }

use candle_core::{Device, DType, Tensor};

use candle_nn::VarBuilder;

use candle_transformers::models::bert::{BertModel as Model, Config};

use hf_hub::api::sync::Api;

use rocket::{get, routes, Rocket, State};

use rocket::serde::json::Json;

use serde::Deserialize;

use std::sync::Mutex;

use tokenizers::Tokenizer;

use anyhow::{Error, Context};

#[derive(Deserialize)]

struct RequestBody {

input_text: String,

}

#[get("/predict", data = "<body>")]

async fn predict(

body: Json<RequestBody>,

model: &State<Mutex<Model>>,

tokenizer: &State<Mutex<Tokenizer>>,

) -> Result<Json<String>, rocket::http::Status> {

let encoding = tokenizer.lock().unwrap()

.encode(&*body.input_text, true)

.map_err(|_| rocket::http::Status::InternalServerError)?;

let input_ids = Tensor::from_slice(

encoding.get_ids(),

&[encoding.get_ids().len()],

&Device::Cpu,

).map_err(|_| rocket::http::Status::InternalServerError)?;

let attention_mask = Tensor::ones(

&[1, encoding.get_ids().len()],

DType::U32,

&Device::Cpu,

).map_err(|_| rocket::http::Status::InternalServerError)?;

// Forward pass

let logits = model

.lock()

.unwrap()

.forward(&input_ids, &attention_mask, None)

.map_err(|_| rocket::http::Status::InternalServerError)?;

// Convert logits to a single scalar value and wrap in a Vec<u32>

let single_id: u32 = logits

.to_scalar::<u32>()

.map_err(|_| rocket::http::Status::InternalServerError)?;

let output_ids = vec![single_id];

// Decode the output IDs to get the final text response

let response = tokenizer

.lock()

.unwrap()

.decode(&output_ids, true)

.map_err(|_| rocket::http::Status::InternalServerError)?;

Ok(Json(response))

}

fn create_api(model: Model, tokenizer: Tokenizer) -> Rocket<rocket::Build> {

rocket::build()

.manage(Mutex::new(model))

.manage(Mutex::new(tokenizer))

.mount("/", routes![predict])

}

async fn download_model() -> Result<(Model, Tokenizer), Error> {

// Set up Hugging Face API client

let api = Api::new()?;

let model_repo = "bert-base-uncased"; // Replace with your chosen model

// Download model weights and tokenizer

let weights_path = api.model(model_repo.to_string()).get("pytorch_model.bin")?;

let tokenizer_path = api.model(model_repo.to_string()).get("tokenizer.json")?;

// Load the tokenizer with custom error handling

let tokenizer = Tokenizer::from_file(&tokenizer_path)

.map_err(|e| Error::msg(format!("Failed to load tokenizer: {}", e)))?;

// Read the weights file into a Vec<u8>

let weights_data = std::fs::read(&weights_path)

.context("Failed to read model weights")?;

// Initialize VarBuilder with BufferedSafetensors as backend

let backend = Box::new(candle_core::safetensors::BufferedSafetensors::new(weights_data)?);

let var_builder = VarBuilder::new_with_args(backend, DType::F32, &Device::Cpu);

// Create Config for the model

let config = Config::default();

// Load the model with config

let model = Model::load(var_builder, &config)?;

Ok((model, tokenizer))

}

#[rocket::main]

async fn main() -> Result<(), Box<dyn std::error::Error>> {

let (model, tokenizer) = download_model().await?;

create_api(model, tokenizer).launch().await?;

Ok(())

}

When a request is sent to the /predict endpoint with input text, the tokenizer first converts the text into input IDs. These IDs, along with an attention mask, are fed into the BERT model’s forward method to obtain the logits tensor. If the output tensor contains only a single element, it is extracted as a scalar, wrapped in a vector, and then decoded back into text using the tokenizer. The decoded result is returned as the JSON response. The main function initializes the API by downloading the model and tokenizer, setting up Rocket to manage them as state for each request.

Experimenting with different deployment strategies, such as deploying on a cloud platform versus running the model on edge devices, provides valuable insights into trade-offs in performance and cost. Deploying on a cloud platform like AWS or Google Cloud allows for scalable resources, making it suitable for applications with variable traffic. Edge deployment, however, requires efficient optimization techniques to handle model inference on lower-power devices, such as model quantization, which reduces memory and compute requirements without significantly compromising performance. These strategies empower developers to align deployment configurations with application constraints, balancing efficiency and accessibility.

Monitoring the deployed model’s performance is essential to maintaining its effectiveness in production. Metrics such as inference latency, memory usage, and model accuracy should be regularly analyzed to ensure the model meets the application’s performance requirements. Rust’s built-in profiling and monitoring tools, such as tracing and tokio-metrics, facilitate tracking performance metrics. By continuously evaluating the model's inference times, developers can address potential bottlenecks, ensuring that the deployment remains responsive. Furthermore, periodic accuracy evaluations using representative data samples help identify model drift, alerting developers to retrain the model when necessary.

Industry use cases for deploying LLMs span various fields, from real-time sentiment analysis in financial services to customer service automation in e-commerce. For instance, financial institutions might deploy LLMs on cloud platforms to analyze high volumes of social media and news data, providing near-real-time insights into market sentiment. In contrast, customer service applications deployed on edge devices enable real-time, low-latency interactions, enhancing user experience by providing instant responses. Recent trends focus on optimizing deployment strategies to handle high-demand environments, with techniques like model pruning and low-rank adaptation becoming increasingly popular. These techniques, when combined with Rust’s efficient deployment pipeline, offer performance improvements and resource savings.

Deploying LLMs with Rust and HuggingFace Candle offers a powerful, low-overhead approach to serving language models in production environments. Rust’s control over system resources, combined with Candle’s flexible model-loading capabilities, creates a stable, efficient foundation for scaling LLM applications. By implementing deployment strategies that include containerization, cloud optimization, and edge computing, developers can tailor deployment environments to the unique requirements of their applications. This comprehensive approach to deployment, from initial setup to monitoring, provides a reliable way to integrate LLMs into production, ensuring that models operate optimally and adapt to evolving application demands.

10.6. Extending and Customizing LLMs with Candle

Open foundational LLMs provide a flexible framework that can be adapted to meet specific needs, offering immense potential for customization to address unique applications and expand functionality. Through HuggingFace Candle, developers can modify these models by adding new layers, adjusting attention mechanisms, or integrating additional modalities—such as combining text with image data. This flexibility allows the model to handle more complex tasks or adapt to specialized fields like multimodal analysis, where the model processes both visual and textual information simultaneously. Transfer learning further supports this customization by leveraging pre-trained knowledge as a foundation, which is extended with additional training data and tasks that enhance model specificity without the need for exhaustive, from-scratch training. This combined approach enables developers to push the boundaries of foundational LLMs, tailoring them to meet emerging demands in fields like healthcare, finance, and autonomous systems.

Customizing LLMs brings practical implications for computational demands and interpretability. Architectural changes, like adding layers or altering the attention mechanism, increase model complexity and, subsequently, training time and memory requirements. Each additional layer adds parameters to the model, which enhances its capacity to capture intricate patterns but also demands greater computational resources. Likewise, modifying the attention mechanism can impact the model’s ability to understand relationships between input tokens, which may either refine or diminish its interpretability. Balancing the depth and functionality of modifications with their impact on computational efficiency is essential. For instance, while deeper models often perform better on complex tasks, they require specialized hardware and increased training times. Maintaining model robustness is also critical, as introducing significant changes might disrupt learned patterns, reducing the model's accuracy. With any customization, careful evaluation of robustness and model stability is necessary to ensure reliable performance in production.

In terms of ethical considerations, model customization requires sensitivity to fairness and bias issues, particularly as tailored models often operate within specific, potentially biased domains. Customizations should be conducted with a focus on ensuring that model outputs remain fair and representative across diverse inputs. When models are customized for sensitive applications, such as predictive hiring tools or financial recommendation engines, rigorous assessments should be made to detect and mitigate biases introduced by task-specific data. By implementing fairness checks during customization, developers can ensure ethical deployment and maintain the integrity of AI applications.

Building a custom LLM in Rust with HuggingFace Candle involves modifying a pre-trained model to include additional layers or functional elements. In the following example, we demonstrate how to extend a foundational LLM by adding a custom layer that adjusts the output embeddings, enhancing the model’s performance on a complex language task.